Aug

The Future Is Here

“Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.”

With just 22 words, we are ushered into a future once heralded in the science fiction films and literature of the past: a future our collective consciousness anticipated, yet one that has taken us by surprise now that we realize how unready we are. It is a future where machines are intelligent enough to replicate a growing number of significant and specialized tasks, and where they threaten to replace not only the human workforce but humanity itself.

Published by the San Francisco-based Center for AI Safety, this 22-word statement was co-signed by leading tech figures such as Google DeepMind CEO Demis Hassabis and OpenAI CEO Sam Altman. Both had called for caution before, joining the ranks of other technologists and executives such as Elon Musk and Steve Wozniak.

Earlier in the year, Musk, Wozniak, and other tech leaders and experts endorsed an open letter calling for a six-month pause on training AI systems more powerful than GPT-4. The pause was meant to allow time to develop and implement AI safety standards and protocols.

Max Tegmark, physicist and AI researcher at the Massachusetts Institute of Technology and co-founder of the Future of Life Institute, once held an optimistic view of the possibilities AI offers but has recently issued a warning. He remarked that humanity is failing this new technology's challenge by rushing to develop and release AI systems that are neither fully understood nor adequately regulated.

Henry Kissinger himself co-wrote a book on the topic. In The Age of AI, Kissinger warned that AI may eventually become capable of reaching conclusions and making decisions that no human is able to consider or understand, a notion made all the more unsettling in the context of everyday life and warfare.

Working With AI

We at Cascade Strategies wholeheartedly agree with this emerging consensus, and we believe we've been diligent in upholding the responsible and conscientious use of AI. Not only have we long advocated for the "Appropriate Use" of AI, but we've also made it a hallmark of how we find solutions for our clients' needs in market research and brand management.

Just consider the work we’ve done with the Expedia Group. For years, they’ve utilized a segmentation model to engage with their lodging partners by offering advice that could lead to the partner winning a booking over a competitor. AI filters through the thousands of possible recommendations to arrive at a shortlist of the best selections optimized for revenue.

As their partner base continued to grow and diversify, they needed a more effective way to engage and appeal to those partners, one that focused more on each partner's behavior and motivations. We came up with two things for Expedia: a psychographic segmentation formed into subgroups based on patterns of thinking, feeling, and perceiving to explain and predict behavior, and more importantly, a Scenario Analyzer that uses the underlying AI model but now delivers recommendations in very action-oriented, compelling messaging tailored to each specific partner.

The best part about the Scenario Analyzer is that whether the partner follows any of the recommended advice or does nothing, Expedia still stands to make a profit while maintaining an image of personalized attentiveness to its partners' needs. And ultimately, it's the partner who gets to decide, not the AI.

Copyright Tara Winstead

Our Future With AI

This is how we view and approach AI: it's not the be-all and end-all solution but rather an essential tool for increasing productivity and efficiency in tandem with excellent human thinking, judgment, and creativity. Yes, it is going to be part of our future, but in line with the new consensus, we believe that AI shaped by human values and experience is the right way forward for this emerging and exciting technology.

Jul

What To Make Of ChatGPT’s User Growth Decline

jerry9789 | artificial intelligence, Burning Questions, Uncategorized

The Beginning Of The End?

More than six months after launching in November 2022, ChatGPT recorded its first decline in user growth and traffic in June 2023. Spiceworks reported that, according to the Washington Post, quality issues and school summer breaks could have contributed to the decline, alongside multiple companies banning employees from using ChatGPT at work.

Brad Rudisail, another Spiceworks writer, suggested that curious visitors drawn by the hype around ChatGPT may also have inflated the early numbers, and that the dwindling user growth reflects this group moving on to the next talk of the town.

The same article also cites open-source AI gaining ground on OpenAI's territory as a possible factor, since open-source models can be more customizable, faster, and more useful, as well as more transparent and less likely to exhibit cognitive biases.

Don’t Buy Into The Hype

But perhaps the best takeaway is Mr. Rudisail's point that we're still in the early stages of AI and it's premature to herald ChatGPT's downfall based on a weak signal like decreased user growth. For all we know, these may be normal numbers, with earlier figures inflated by the excitement surrounding its launch. "Don't buy into the hype" is a position we at Cascade Strategies advocate when it comes to AI.

The advent of AI has taken productivity and efficiency to levels never seen before, so the initial hoopla over it is understandable. However, we believe people are now becoming more measured in their appraisal of AI. They're starting to see that AI is pretty good at "middle functions" requiring intelligence, whether human or machine-based. But when it comes to "higher function" tasks involving discernment, abstraction, and creativity, AI output falls short of excellence. Sometimes mediocrity is acceptable, but most pursuits demand excellence.

Excellence Achieved Through High Level Human Thinking

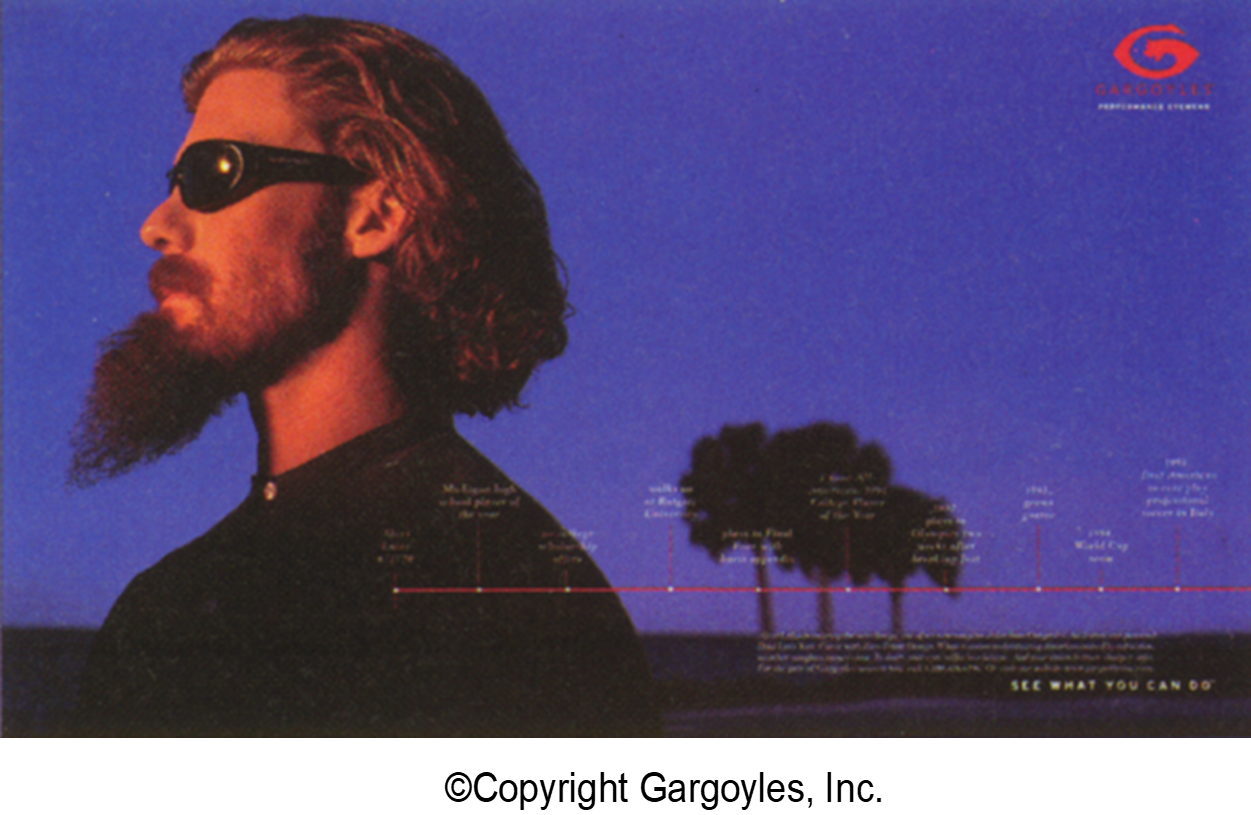

To illustrate where AI falls short in certain activities, let's consider our case study for the Gargoyles brand of sunglasses. ChatGPT can produce a large number of sunglasses ads at little or no cost, but most of those ads won't bring anything new to the table or resonate with the audience.

However, when researchers spent time with the most loyal customers of Gargoyles to come up with a new ad, they discovered a commonality that AI simply did not have the power to discern. They found a unique quality of indomitability among these brand loyalists: many of them had been struck down somewhere in their upward striving, and they found the strength and resolve to keep going while the odds were clearly against them. They kept going and prevailed. The researchers were tireless in their pursuit of this rare trait, and they stretched the interpretive, intuitive, and synthesis-building capacities of their right brains to find it. Stretching further, they inspired creative teams to produce the award-winning “storyline of life” campaign for the Gargoyles brand.

All told, this is a story of seeking excellence, where hard-working humans push the ordinary capacities of their intellects toward deeper understanding of a subject, rather than settling for a summary of the aggregate human experience on the topic. This is what excellence is all about, and AI is not prepared to do it. To achieve it, humans need a strong desire to go beyond the mediocre. They have to believe that stretching their brains to this level results in something good.

How To Make “Appropriate Use” of AI

But that is not to say that AI and high-level human thinking can't mix. The key is to recognize where AI best fits in your processes and methodologies, then decide where human intervention comes in. This is what we call "Appropriate Use" of AI.

Take, for example, our case study for Expedia Group and how it engages with its roughly one million hospitality partners. Expedia offers its partners "advice" that helps them win bookings over their competitors. With thousands of pieces of advice to give, Expedia uses AI to filter through all those recommendations and present only the best ones to optimize revenue. Cascade Strategies has helped further by creating a tool called Scenario Analyzer, which uses the underlying AI model to automate the selection of the most revenue-optimal pieces of advice.

In the end, the decision on which advice to follow (or whether to accept any advice at all) still rests with Expedia's partner, not the AI.

Copyright ClaudeAI.uk

A Double-edged Sword

As you can see with ChatGPT, it's easy to get carried away by all the hype surrounding AI. At launch, it was acclaimed for the exciting possibilities it represented, but now that it has hit a bump in the road, some people and outlets act as if ChatGPT is on its last legs. Hype is useful for drawing attention; unfortunately, in most cases it sets up the loftiest of expectations and overrides good sense.

This is why we think a sensible mindset is the best way to approach AI: seeing it for what it really is. It's a tool for increasing productivity and efficiency, not the be-all and end-all; there is still much room for excellent human thinking, backed by experience and values, to come into play. Our concerns for now may not be as profound and dire as those expressed by James Cameron, Elon Musk, Steve Wozniak, and others, but we'd like to believe that "appropriate use" of AI is the key to better understanding and responsible stewardship of this emerging technology.

Jun

Appropriate Use of AI

jerry9789 | artificial intelligence, Brand Surveys and Testing, Brandview World, Burning Questions

The Rise Of AI

Believe it or not, Artificial Intelligence has existed for more than 50 years. But as the European Parliament has pointed out, it took recent advances in computing power, algorithms, and data availability to accelerate breakthroughs in AI technologies. In 2022, the sudden popularity of OpenAI's ChatGPT made AI relatively mainstream.

That's not to say AI wasn't already part of our daily lives: from web searches to online shopping and advertising, from digital assistants on smartphones to self-driving vehicles, from cybersecurity to the fight against disinformation on social media, AI-powered applications have been employed to enable automation and increase productivity.

The Woes Of AI

However, the rise of AI also brings concerns about its expanding use across industries and day-to-day activities: perceived negative socio-political effects, the threat of AI-powered processes taking over human jobs, and the advent of intelligent machines capable of evolving beyond their programming and human supervision. That last one is mostly inspired by science fiction, but it is a plausible possibility nonetheless. A more grounded, present-day concern, however, is the overreliance on and misuse of Artificial Intelligence.

Copyright geralt (Pixabay)

Sure, AI can perform a variety of simple and complex tasks by simulating human intelligence, producing objective and accurate results quickly and efficiently. However, there are some activities requiring discernment, abstraction, and creativity where AI's approximation of human thinking falls short. Cognitive exercises like these not only need high-level thinking but also involve value judgments honed by human experience.

The Expedia Group Case Study

This brings us to our case study for the Expedia Group, which has around a million hospitality partners. Its goal is to increase engagement with those partners. For five years, Expedia grouped its lodging partners, which at the time were mostly chain hotels, using a segmentation model that helped its partner sales teams decide how to prioritize their time. Expedia gives its partners "advice" through marketing, in-product messaging, or the partner's account manager. When a partner takes advantage of Expedia's advice, it usually receives the booking over its competitor.

Copyright geralt (Pixabay)

Now, you can imagine that Expedia has thousands of pieces of advice, or recommendations, to give its partners. So how does Expedia determine which recommendation is most likely to prompt a partner to act and produce optimal revenue?

If you answered "Use AI," you're on the right track. With thousands of possible decisions, Expedia just wants AI to filter out the bad choices and boil them down to a few good, revenue-optimizing recommendations. Expedia wants to use AI to help with decisions, but it doesn't want AI to make those decisions for it or its partners.

Copyright Seanbatty (Pixabay)

But now things are different: Expedia's partners have grown to include independent hotels and vacation rentals. So what if Expedia added dimensions to the model that let it target partners with recommendations suited to their way of thinking and feeling, and that appeal to their primary motivations as a property?

That's exactly where Cascade Strategies stepped in. We followed a disciplined five-step process in which, to name just a few of the things we did, we interviewed 1,200 partners and prospects across 10 countries in 4 regions, converted emotional factors into numeric values, and used advanced forms of Machine Learning to arrive at optimal segmentation solutions. Through this process, we built a psychographic segmentation formed into subgroups based on patterns of thinking, feeling, and perceiving to explain and predict behavior.

Copyright Pavel Danilyuk

The segmentation "conceived the game anew" for Expedia Group (in the way Eric Schmidt and his co-authors describe in their book The Age of AI: And Our Human Future). Now seeing its partners in a different light, Expedia needed its communications to reflect this new view, with the end goal of matching each segment with the right offer. The messages it deployed needed to be very action-oriented, based on what compels each segment.

Cascade Strategies then created an application called Scenario Analyzer to make this easy for people at Expedia. Users could simply ask the Scenario Analyzer for the optimal decision under given input conditions. A marketer selects a target group and a region, and the Scenario Analyzer answers, in effect: "You could do any of these six things and you'd make some money. It's your call."
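To make the "advisor, not decision maker" idea concrete, here is a minimal, hypothetical sketch in Python of how a shortlist like the Scenario Analyzer's might be produced. It is not Cascade Strategies' or Expedia's actual implementation: the `Recommendation` type, the `shortlist` function, the segment and region labels, and all dollar figures are invented for illustration, and the sketch assumes the underlying AI model has already estimated an expected revenue uplift for each piece of advice.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Recommendation:
    """One piece of partner "advice" with a model-estimated revenue uplift.

    Hypothetical structure: field names and values are illustrative only.
    """
    message: str
    segment: str
    region: str
    expected_uplift: float  # assumed: estimated extra revenue over a fixed period


def shortlist(recs: list[Recommendation], segment: str, region: str,
              top_n: int = 6) -> list[Recommendation]:
    """Rank advice for one target group and region; a human makes the final call.

    Keeps only advice for the requested segment and region, drops advice the
    model expects to lose money (the "worst ideas"), and returns the top_n
    remaining items sorted by expected uplift, highest first.
    """
    candidates = [
        r for r in recs
        if r.segment == segment and r.region == region and r.expected_uplift > 0
    ]
    candidates.sort(key=lambda r: r.expected_uplift, reverse=True)
    return candidates[:top_n]


if __name__ == "__main__":
    # Toy catalogue: segments, regions, and figures are made up for this sketch.
    catalogue = [
        Recommendation("Drive more group or corporate business", "segment_a", "region_1", 90_000.0),
        Recommendation("Lower minimum length of stay", "segment_a", "region_1", 35_000.0),
        Recommendation("Add more room photos", "segment_a", "region_1", 12_000.0),
        Recommendation("Cut rates 30% across the board", "segment_a", "region_1", -50_000.0),
        Recommendation("Join a loyalty promotion", "segment_b", "region_2", 60_000.0),
    ]
    for rank, rec in enumerate(shortlist(catalogue, "segment_a", "region_1"), start=1):
        print(f"{rank}. {rec.message} (~${rec.expected_uplift:,.0f})")
    print("It's your call.")
```

The function only filters and ranks; nothing in it acts on a recommendation, which mirrors the point that the partner, not the AI, makes the final decision.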

If the partner does nothing, Expedia still makes about $1.5 million from these partners during a 90-day period, as part of its regular business momentum. However, if the partner acts on the top-ranked recommendation, which carries the message "Maximize your revenue potential by driving more groups or corporate business to your property," it would result in about $140,000 more during the same period, which is about a 1% gain. Because we couldn't reach all partners with the same message, we lowered our expectations a little, but in the end we did slightly better than expected.

The “Appropriate Use” of AI

So what did we do? We made "Appropriate Use" of AI. The AI neither made the decision nor guaranteed the money. It warded off the worst ideas and told us which recommendation was best in comparative terms.

Many people in marketing are treating AI as the next cool thing, so they want to jam it in wherever they can, whether it's helpful or not. "Appropriate Use" stands against that, holding that the best way to apply AI to marketing is as Decision Support that remains under human discretion and judgment, rather than letting AI make the choices itself.

We think AI can at times be a very poor decision maker but a very good advisor. And we're not alone in this concern: 61% of Europeans view AI and robots favorably, while 88% say these technologies require careful management.

Health care is another example of how important human intervention is to the "Appropriate Use" of AI. As noted by frontiersin.org, the legal and regulatory framework for the practice of medicine and public health may not be well developed in some parts of the world. Introducing artificial intelligence without careful and thoughtful planning might reinforce or aggravate existing health disparities among different demographic groups.

And this is part of the reason why we believe in shaping AI with human values, including the dignity and moral agency of humans. The “defining future technology” that is AI is already proving to be a powerful tool for providing solutions and achieving goals, but it can only unlock levels of excellence, innovation and integrity when guided appropriately by human values and experience.
