Aug

The Future Is Here

“Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.”

With just 22 words, we are ushered into a future once heralded in the science fiction films and literature of the past. Our collective consciousness anticipated this future, yet it has taken us by surprise now that we realize how unready we are. It is a future where machines are intelligent enough to replicate a growing number of significant and specialized tasks, and where they threaten to replace not only the human workforce but humanity itself.

The statement was published by the San Francisco-based Center for AI Safety and co-signed by leading tech figures such as Google DeepMind CEO Demis Hassabis and OpenAI CEO Sam Altman. Both have called for caution before, joining the ranks of other tech specialists and executives like Elon Musk and Steve Wozniak.

Earlier in the year, Musk, Wozniak, and other tech leaders and experts endorsed an open letter calling for a six-month pause on training AI systems more powerful than GPT-4. The pause was meant to allow time to develop and implement AI safety standards and protocols.

Max Tegmark is a physicist and AI researcher at the Massachusetts Institute of Technology and co-founder of the Future of Life Institute. He once held an optimistic view of what AI makes possible, but he has recently issued a warning. He remarked that humanity is failing this new technology’s challenge by rushing to develop and release AI systems that are neither fully understood nor adequately regulated.

Henry Kissinger himself co-wrote a book on the topic. In The Age of AI, Kissinger warned that AI would eventually become capable of reaching conclusions and making decisions that no human can consider or understand. The notion becomes even more unsettling in the context of everyday life and warfare.

Working With AI

We at Cascade Strategies wholeheartedly agree with this emerging consensus. We also believe we have been consistent in upholding the responsible and conscientious use of AI. We have long advocated for the “Appropriate Use” of AI, and we have made it a hallmark of how we solve our clients’ needs in market research and brand management.

Just consider the work we’ve done with the Expedia Group. For years, they’ve used a segmentation model to engage their lodging partners by offering advice that could help a partner win a booking over a competitor. AI filters through thousands of possible recommendations to arrive at a shortlist of the best ones, optimized for revenue.
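At its core, the shortlisting step described above ranks candidates by a predicted value and keeps the top few. The sketch below is a minimal illustration only. It assumes each recommendation already carries a model-estimated revenue figure. The `Recommendation` class, its fields, the sample advice and the revenue numbers are all hypothetical, and none of them reflect Expedia’s actual model.

```python
import heapq
from dataclasses import dataclass


@dataclass(frozen=True)
class Recommendation:
    # Hypothetical fields: the advice text and a model-estimated revenue uplift.
    advice: str
    expected_revenue: float


def shortlist(recommendations: list[Recommendation], k: int = 3) -> list[Recommendation]:
    """Return the k recommendations with the highest expected revenue, best first."""
    return heapq.nlargest(k, recommendations, key=lambda r: r.expected_revenue)


if __name__ == "__main__":
    # Hypothetical candidate advice for a single lodging partner.
    candidates = [
        Recommendation("Lower the weekend rate by 5%", 1200.0),
        Recommendation("Add free cancellation", 950.0),
        Recommendation("Upload more room photos", 300.0),
        Recommendation("Offer a breakfast package", 780.0),
    ]
    for rec in shortlist(candidates, k=2):
        print(f"{rec.advice}: {rec.expected_revenue:.0f}")
```

`heapq.nlargest` returns the top k without fully sorting thousands of candidates. In practice the hard part is the revenue estimate itself, which this sketch takes as given.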

As their partner base grew and diversified, Expedia needed a more effective way to engage and appeal to partners, one that focused more on each partner’s behavior and motivations. We built two things for Expedia. The first was a psychographic segmentation that groups partners into subgroups based on patterns of thinking, feeling, and perceiving, to explain and predict behavior. The second, and more important, was a Scenario Analyzer. It uses the underlying AI model but delivers its recommendations as action-oriented, compelling messages tailored to each specific partner.
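The post does not say how the psychographic subgroups were formed. One common way to turn attitudinal survey responses into segments is clustering. The sketch below uses plain k-means with a deterministic farthest-first starting point, and it is purely an assumed illustration. The three survey dimensions and all of the scores are hypothetical.

```python
import math

Point = tuple[float, ...]


def farthest_first(points: list[Point], k: int) -> list[Point]:
    """Deterministic seeding: start with the first point, then repeatedly add
    the point whose nearest existing centroid is farthest away."""
    centroids = [points[0]]
    while len(centroids) < k:
        nxt = max(points, key=lambda p: min(math.dist(p, c) for c in centroids))
        centroids.append(nxt)
    return centroids


def kmeans(points: list[Point], k: int, max_iter: int = 100) -> tuple[list[int], list[Point]]:
    """Plain k-means: assign each point to its nearest centroid, then move each
    centroid to the mean of its members, until the assignments stop changing."""
    centroids = farthest_first(points, k)
    labels: list[int] = []
    for _ in range(max_iter):
        new_labels = [min(range(k), key=lambda j: math.dist(p, centroids[j])) for p in points]
        if new_labels == labels:
            break
        labels = new_labels
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:  # an empty cluster keeps its previous centroid
                centroids[j] = tuple(sum(dim) / len(members) for dim in zip(*members))
    return labels, centroids


if __name__ == "__main__":
    # Hypothetical 1-5 survey scores: (openness to advice, price sensitivity, growth ambition)
    responses = [(1, 1, 2), (1, 2, 1), (2, 1, 1), (5, 5, 4), (4, 5, 5), (5, 4, 5)]
    labels, centroids = kmeans(responses, k=2)
    print(labels)  # [0, 0, 0, 1, 1, 1]
```

The algorithm only groups people who answered similarly. In a real study, the researcher still has to judge how many segments to use, which survey items to include and what each cluster means.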

The best part about the Scenario Analyzer is that Expedia still stands to profit whether the partner follows some of the recommended advice or does nothing at all. Either way, Expedia maintains an image of personalized attentiveness to its partners’ needs. And ultimately, it’s the partner who gets to decide, not the AI.

Copyright Tara Winstead

Our Future With AI

This is how we view and approach AI: not as the end-all, be-all solution but as an essential tool for increasing productivity and efficiency, working in tandem with excellent human thinking, judgment, and creativity. Yes, AI is going to be part of our future. In line with the new consensus, however, we believe the right way forward for this emerging and exciting technology is AI shaped by human values and experience.

Aug

How Can Healthcare Companies Identify Who Needs Remediation Programs?

jerry9789 artificial intelligence, Burning Questions

What Is Remediation?

The Cambridge Dictionary defines remediation as “the process of improving or correcting a situation.” Remediation programs are common in teaching and education, where they address learning gaps by reteaching basic skills in core areas like reading and math. As an understood.org article points out, remedial programs are expanding in many places in our post-COVID-19 world.

In healthcare, there’s a wide range of remediation programs, or “remedial care,” that differ by end goal. These goals may include smoking cessation, anti-obesity, weight reduction, diet improvement, exercise, heart-healthy living, alcoholism treatment, drug treatment, and more. But how do you identify the people who need remedial care the most?

Who Needs Remediation?

You might say you can tell who needs remedial care just by looking at a prospective patient’s physical appearance, but that is a shortsighted answer. And what about those who need remedial care for a heart-healthy lifestyle? Surely you can’t spot a likely candidate for that program at a glance.

It goes deeper than that. Suppose you are a healthcare representative whose limited time and resources let you offer remedial care to only a select few individuals. You still want the program to succeed in achieving its intended goals. Imagine all that time, effort, and resources spent, only for the patient to relapse into old habits soon after completing the program, or even in the middle of it.

Deep Learning and Remediation

This is where deep learning comes in. Deep learning, also known as hierarchical learning or deep structured learning, is defined by Health IT Analytics as a type of machine learning that uses a layered algorithmic architecture to analyze data. In deep learning models, data is filtered through a cascade of multiple layers, and each successive layer uses the output of the previous one to inform its results. Deep learning models can become more accurate as they process more data, essentially learning from previous results to refine their ability to make correlations and connections.
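A tiny fully connected network makes the “cascade of layers” idea concrete: each layer’s output becomes the next layer’s input. This is a minimal, untrained sketch. The layer sizes, random weights and four input features are arbitrary assumptions. The training step (typically backpropagation), which is what actually lets a model improve as it sees more data, is not shown.

```python
import math
import random

Layer = tuple[list[list[float]], list[float]]  # (weights, biases)


def relu(x: float) -> float:
    return max(0.0, x)


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def dense(inputs: list[float], layer: Layer, activation) -> list[float]:
    """One fully connected layer: each neuron computes activation(w . x + b)."""
    weights, biases = layer
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def random_layer(n_in: int, n_out: int, rng: random.Random) -> Layer:
    # Untrained, randomly initialized weights; a real model would learn these from data.
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def forward(inputs: list[float], layers: list[Layer]) -> list[float]:
    """Feed data through the cascade: every layer consumes the previous layer's output.
    Hidden layers use ReLU; the final layer uses a sigmoid to produce a 0-1 score."""
    activations = inputs
    for i, layer in enumerate(layers):
        act = sigmoid if i == len(layers) - 1 else relu
        activations = dense(activations, layer, act)
    return activations


if __name__ == "__main__":
    rng = random.Random(42)
    sizes = [4, 8, 8, 1]  # 4 input features -> two hidden layers -> 1 output
    network = [random_layer(a, b, rng) for a, b in zip(sizes, sizes[1:])]
    print(forward([0.2, 0.7, 0.1, 0.9], network))
```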

Deep learning models handle huge volumes of complex data through multi-layered analytics to provide fast, accurate, and actionable results or insights. Applied to the scenario described above, deep learning sifts through that multitude of patient data and prioritizes the people who need remedial care the most.

You can also use its findings to identify two things about each individual. The first is whether they will return monetary value to your healthcare brand. The second is whether they are most likely to “engage” with, or participate in, programs your company offers, such as wellness, diet, fitness, or exercise. These may also be the people most likely to commit to avoiding poor lifestyle choices such as overeating, smoking, and drinking alcohol, which improves the odds that the remediation program succeeds.
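The two ideas above are who needs care most and who is most likely to engage. One simple way to combine them is to rank people by the product of two model scores and keep only as many as the program can serve. This is a hedged sketch, not Cascade Strategies’ method. It assumes a trained model has already produced a hypothetical `need_score` and `engagement_prob` for each person. The patient IDs and numbers are invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    patient_id: str
    need_score: float       # hypothetical model output in [0, 1]: expected benefit from the program
    engagement_prob: float  # hypothetical model output in [0, 1]: chance of completing the program


def priority(c: Candidate) -> float:
    """Expected benefit, discounted by the chance the person does not complete the program."""
    return c.need_score * c.engagement_prob


def select_for_program(candidates: list[Candidate], capacity: int) -> list[Candidate]:
    """Rank by priority (highest first) and keep only as many people as the program can serve."""
    return sorted(candidates, key=priority, reverse=True)[:capacity]


if __name__ == "__main__":
    pool = [
        Candidate("A", need_score=0.90, engagement_prob=0.20),  # high need, unlikely to stick with it
        Candidate("B", need_score=0.70, engagement_prob=0.80),
        Candidate("C", need_score=0.50, engagement_prob=0.90),
        Candidate("D", need_score=0.95, engagement_prob=0.50),
    ]
    print([c.patient_id for c in select_for_program(pool, capacity=2)])  # ['B', 'D']
```

Multiplying the scores is only one design choice. It deliberately pushes down people like “A”, who need help badly but are unlikely to finish. That trade-off is worth reviewing with human judgment rather than leaving it to the formula.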

Cascade Strategies combines three decades of market research experience with the conscientious use of AI. With that combination, we have helped healthcare organizations develop advanced models that handle and filter their data and identify the likeliest candidates for their programs. We help industry professionals not only recognize their ideal customers but also reach them with some of the most effective, award-winning marketing campaigns, drawing on services such as Brand Development Research and Segmentation Studies. To see more examples of how we help leading companies worldwide achieve their goals, please visit our website.

Here are some of our suggestions for further reading on deep learning and healthcare:

https://builtin.com/artificial-intelligence/machine-learning-healthcare

https://research.aimultiple.com/deep-learning-in-healthcare/

https://healthitanalytics.com/features/types-of-deep-learning-their-uses-in-healthcare

Aug

The Impact of AI

In The Age of AI, which Henry Kissinger co-wrote with Eric Schmidt and Daniel Huttenlocher, Kissinger tried to warn us that AI would eventually be able to reach conclusions or decisions that no human can consider or understand. Put another way, self-learning AI would become capable of making decisions beyond what humans programmed into it. It would base those conclusions on whatever it deems most logical, regardless of how negative or devastating the consequences might be.

A common illustration is how AI has already transformed strategy games like chess. Given the chance to learn the game for itself, rather than relying on plays programmed in by the best human chess masters, AI made moves that had never occurred to human players. And against other computer programs limited to human-based strategies, the self-learning AI proved dominant.

Applied to warfare, this could mean AI proposing, or even executing, the most inhumane plans over human objections, simply because it considers them the most logical step to take.

The Influence of AI

Kissinger’s warning also draws attention to how far-reaching AI’s influence already is in modern life. That influence extends even to seemingly innocuous things such as social media algorithms, grammar checkers, and the much-hyped ChatGPT. As dependence on AI grows, human thinking risks being eclipsed by machine-based efficiency and effectiveness. And how AI arrives at such efficient and effective decisions becomes questionable, because it may be difficult or nearly impossible to trace what it has learned along the way.

Just imagine someone making a decision influenced by information that AI fed them, yet being unable to explain the reasoning behind it. That person may not realize it, but at that point they’re living in an AI world, where human decision-making imitates machine decision-making rather than the reverse. Alan Turing was getting at this interchangeability with his famous proposal about artificial intelligence, the so-called “Turing Test.” It holds that you haven’t reached anything that can fairly be called AI until you can’t tell the difference between the machine and a human.

Copyright Pavel Danilyuk

Appropriate Use of AI

However, it’s been pointed out that the book doesn’t subscribe to “AI fatalism,” the common belief that AI’s course is inevitable and humans are powerless to change it. The authors write that we are still capable of controlling and shaping AI with our human values. This is what we at Cascade Strategies have long advocated as AI’s “appropriate use.” We have the opportunity to limit or restrain what AI learns, or to align its decision-making with human values.

Kissinger has sounded the warning, and others have already called for limits on AI’s capabilities. We are hopeful that in the coming years, with the best modern thinkers and tech experts at the forefront, we will move toward an AI-assisted world where human agency remains paramount. The alternative is an AI-dominated world where inscrutable decisions are left to the machines.

Jul

What To Make Of ChatGPT’s User Growth Decline

jerry9789 artificial intelligence, Burning Questions, Uncategorized

The Beginning Of The End?

More than six months after its launch in November 2022, ChatGPT recorded its first decline in user growth and traffic in June 2023. According to Spiceworks, The Washington Post suggested that quality issues and school summer breaks could have contributed to the decline. Another likely factor was that multiple companies had banned employees from using ChatGPT at work.

Brad Rudisail, another Spiceworks writer, suggested that curious visitors drawn by the hype may also have inflated the early numbers. In his view, user growth dwindled as this group moved on to the next talk of the town.

The same article also cites open-source AI gaining ground on OpenAI’s territory as a possible factor. It notes that open-source models can be more customizable, faster, and more useful, as well as more transparent and less prone to cognitive biases.

Don’t Buy Into The Hype

But perhaps the best takeaway is Mr. Rudisail’s point that we’re still in the early stages of AI. It’s premature to herald ChatGPT’s downfall based on a weak signal like slower user growth. For all we know, these are normal numbers, and the earlier figures were inflated by the excitement surrounding the launch. “Don’t buy into the hype” is a position we at Cascade Strategies advocate when it comes to AI.

The advent of AI has taken productivity and efficiency to levels never seen before, so the initial hoopla is understandable. However, we believe people are starting to settle into a more measured appraisal of AI. They’re beginning to see that AI is quite good at “middle functions,” tasks that require intelligence of some kind, whether human or machine. But when it comes to “higher function” tasks involving discernment, abstraction, and creativity, AI output falls short of excellence. Sometimes mediocrity is acceptable, but most pursuits require excellence.

Excellence Achieved Through High Level Human Thinking

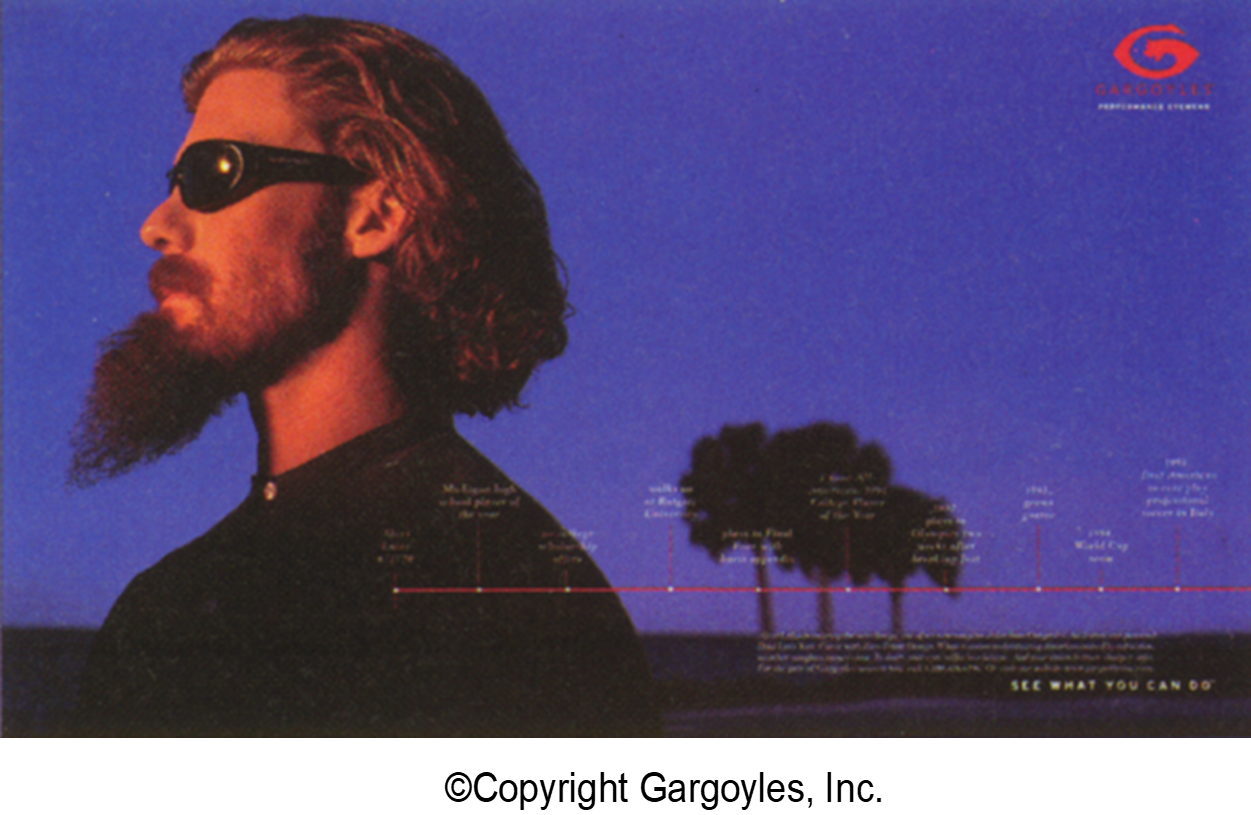

To illustrate where AI falls short, consider our case study for the Gargoyles brand of sunglasses. ChatGPT can produce a large number of sunglasses ads at little or no cost, but most of those ads won’t bring anything new to the table or resonate with the audience.

However, researchers spent time with the most loyal Gargoyles customers to develop a new ad, and they discovered a commonality that AI simply did not have the power to discern: a unique quality of indomitability. Many of these brand loyalists had been struck down somewhere in their upward striving. Yet they found the strength and resolve to keep going when the odds were clearly against them, and they prevailed. The researchers were tireless in pursuing this rare trait, stretching the interpretive, intuitive, and synthesis-building capacities of their right brains to find it. Stretching further, they inspired creative teams to produce the award-winning “storyline of life” campaign for the Gargoyles brand.

All told, this is a story of seeking excellence. Hard-working humans pushed the ordinary capacities of their intellects toward a deeper understanding of a subject, rather than settling for a summary of the aggregate human experience on the topic. This is what excellence is all about, and AI is not prepared to do it. To achieve it, humans must have a strong desire to go beyond the mediocre, and they must believe that stretching their brains this far produces something good.

How To Make “Appropriate Use” of AI

But that is not to say that AI and high level human thinking can’t mix. The key is to recognize where AI would best fit in your process and methodologies, then decide where human intervention comes in. This is what we call “Appropriate Use” of AI.

Take, for example, our case study for Expedia Group and how it engages with millions of hospitality partners. Expedia offers its partners “advice” that helps them win bookings over their competitors. With thousands of pieces of advice available, Expedia uses AI to filter through all those recommendations and present only the ones that best optimize revenue. Cascade Strategies helped further by creating a tool called Scenario Analyzer, which uses the underlying AI model to automate the selection of the most revenue-optimal advice.

In the end, the decision on which advice to follow (or whether to accept any advice at all) still rests with Expedia’s partner, not the AI.

Copyright ClaudeAI.uk

A Double-edged Sword

As ChatGPT shows, it’s easy to get carried away by the hype surrounding AI. At launch, it was acclaimed for the exciting possibilities it represented. Now that it has hit a bump in the road, some people and outlets act as if ChatGPT is on its last legs. Hype is useful when attention needs to be drawn. Unfortunately, in most cases it overrides good sense and sets up the loftiest of expectations.

This is why we think a sensible mindset is the best way to approach AI: to see it for what it really is. It’s a tool for increasing productivity and efficiency, not the end-all and be-all. There is still plenty of room for excellent human thinking, backed by experience and values, to come into play. Our concerns for now may not be as profound and dire as those expressed by James Cameron, Elon Musk, Steve Wozniak, and others. Still, we’d like to believe that “appropriate use” of AI is the key to better understanding and responsible stewardship of this emerging technology.