Dec

What’s Going On With Consumer Startups In The Age of AI?

jerry9789 0 comments artificial intelligence, Brandview World, Burning Questions

Enterprise Over Consumer

The dawn of the Internet era witnessed the emergence of huge consumer companies like Amazon, while the advent of mobile technology put Uber and the like at the forefront. However, the tide appears to have turned in this new age of AI, with startup founders and investors favoring enterprise over consumer efforts.

This observation underpins the PitchBook article “Where are all the consumer AI startups—and why aren’t VCs funding them?” It stems from the author’s two days at Slush, the recent startup conference in Helsinki, where venture capitalists expressed strong interest in AI startups, as expected, but notably in B2B over B2C.

She adds that, according to PitchBook data, venture funding for B2B AI startups stands at $16.4 billion this year while B2C is at only $7.8 billion. But with the consumer AI market estimated to be twice as large as its enterprise counterpart by 2032, she poses the question: is there a lack of B2C startups, or are VCs simply not funding consumer AI companies?

Copyright: fauxels

The Challenges of B2C AI

To start with, investors generally seem less keen on consumer startups, especially since the VC downturn that began in 2022. A combination of factors such as rising inflation, higher interest rates and valuation markdowns has created a harsh macroeconomic climate in which B2C AI struggles to thrive. And when stable profitability is the bottom line, investors are understandably more attracted to the steady, predictable revenues generated by B2B AI companies than to the erratic and often unsustainable B2C AI business models.

Jordan Steiner, CEO and developer capital/chief strategy officer at Monadical, shared in a LinkedIn post some unfavorable characteristics he noticed in B2C AI companies. Most B2C AI ideas these days, he found, are easily replicable. When competitors can not only clone but also improve on an existing idea, this can hamstring any company’s chances of dominating the space or becoming an incumbent. And when these factors create a cycle where users chase the newest cool product and churn when the novelty wears off, it illustrates just how unsustainable B2C AI business models can be, especially at a time when user acquisition costs are higher.

And when a business model banks more on desirability than on addressing pain points, there is a continuous struggle to iterate and produce new features or content. This requires a consistent and ongoing understanding of consumer trends, which in turn requires access to consumer data and insights that a startup might not have at the beginning and needs to build over time, primarily through user acquisition. Incumbent B2C companies have most likely invested heavily in acquiring consumer data and insights to maintain and defend their longstanding piece of the market.

So why do B2B AI investments seem the more attractive prospect at this time? By prioritizing pain points over desirability, then selling to and maintaining long-term relationships with key industry players, B2B AI companies are eventually able to build the desirability that attracts more clients. B2B clients are also more likely to sign and keep multi-year contracts and subscriptions, which provide not only steady and stable revenue but also client data vital for product improvement and customization, helping build brand loyalty, incumbency and low churn.

Copyright: Christina Morillo

Can A B2C AI Company Succeed?

Despite the aforementioned obstacles, there is room for a consumer AI startup to thrive. The PitchBook article suggests focusing on “other spaces where big tech has less credibility, such as mental health solutions.” In the same article, Point72 Ventures managing partner Sri Chandrasekar highlights differentiation as a key characteristic that helps a B2C AI company close investments. This uniqueness holds off attempts at replication while tapping into the desirability that excites and engages consumers and attracts investors.

If anything, a consumer AI startup might need to bootstrap rather than rely on an idea alone to attract investment. Demonstrating and executing on your unique position not only proves that your idea is sound and feasible but also gets your B2C AI company past the first step toward the potentially higher rewards offered in this space.

Featured Image Copyright: Pavel Danilyuk

Top Image Copyright: Photo By Kaboompics.com/Karolina Grabowska

Nov

“Humanizing” Market Research with AI

jerry9789 0 comments artificial intelligence, Brand Surveys and Testing, Burning Questions

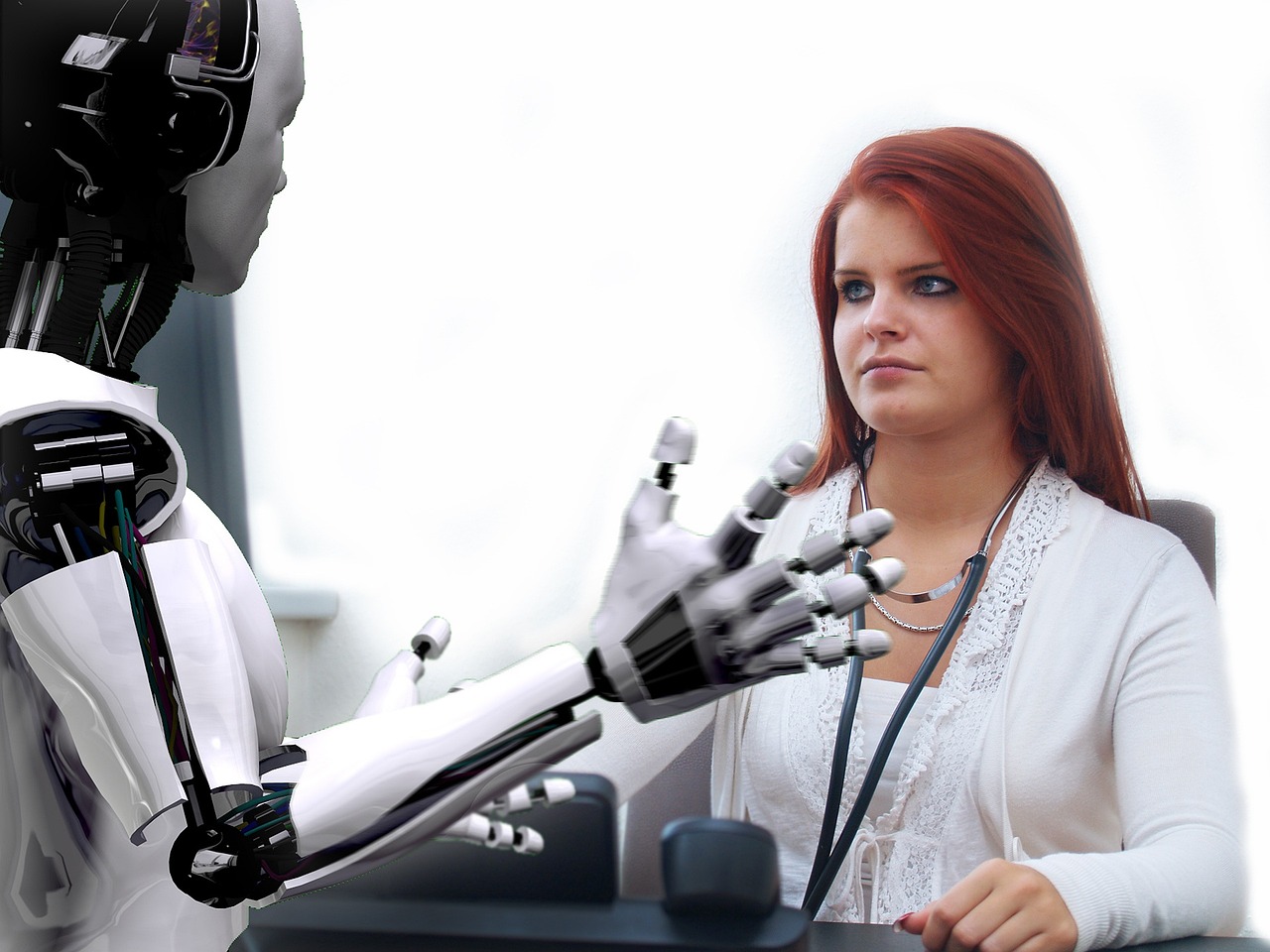

The Boon and Bane of AI

The increasing and widespread utilization and demand for Artificial Intelligence have been met with both excitement and reservation. Excitement for the possibilities AI’s implementation unlocks, oftentimes steps ahead of the curve or beyond expectations; reservations not only stemming from the risks over its unethical and unchecked use, but also the ramifications for human involvement now that intelligent machines represent an optimized and economical choice for completing tasks and processes. But can there be a middle ground somewhere where AI and human engagement coexist and collaborate?

The “Humanization” of Market Research

“Capturing the Human Element in an Artificial World” by Eric Tayce (Quirk’s Marketing Research Review, Sep-Oct 2024) posits that such a middle ground is possible, especially in market research. Aware enough of its excessive dependence on technology to push for “humanized” research data, the industry saw a dramatic shift from “data-intense tomes and clinical-sounding slide titles” to “streamlined, narrative-style reporting” focused on “the unique motivations and experiences that drive customer behaviors.” The latter “humanized” approach is able to communicate business goals while connecting and engaging on an emotional level. However, generative large language models (LLMs) cast a shadow on this “humanized” approach by offering synthetic outputs and progressive algorithms.

But combining both AI and efforts to “humanize” research can result in the whole being greater than the sum of its parts. The article shared that AI can help collect more unstructured data from survey research by employing conversational chatbots to create a natural, richer experience for the respondent. That unstructured data in turn can potentially provide more organic, more human insights with AI-powered algorithms, an undertaking that was once considered too complex or time-consuming. AI can also build multifaceted perspectives through context by linking survey records with a broad range of data sources. And in lieu of traditional static deliverables, data and insights can be presented in a vibrant and interactive narrative by an AI-powered persona.

The “Human” Element

All these interesting prospects can only be achieved when AI is tempered by high-quality human input and thoughtful implementation that considers ethical and moral implications. Beyond outright mistakes and hallucinations, AI has been observed to be overly helpful and excitable. Human oversight and input remain key in ensuring AI models are trained, fine-tuned and grounded with quality and relevant datasets while having enough flexibility to engage appropriately in open-ended interactions.

There’s no denying just how transformative AI is in reshaping industries today, including market research. Despite concerns of machines taking over jobs, one can look at it with the perspective of roles changing and adapting. AI with its generative and synthetic capabilities can elevate the “humanization” of market research, but to get to that point we simply can’t forget that humans are indispensable to the whole process.

Featured Image Copyright: GrumpyBeere

Top Image Copyright: Darlene Anderson

Jul

AI In Market Research: The Story So Far – Chapter 3: A Glimpse Into A Future with AI

jerry9789 0 comments artificial intelligence, Burning Questions

This is the third installment in our series on AI webinars. The inspiration for this series is a simple question about what these AI seminars are saying. There are hundreds of these seminars floating about, all based on the premise that AI technology is here to stay, people are curious about it, and they want to know how it will affect their lives.

We asked one of our staff members to attend several of these AI webinars in pursuit of the answer to this question: what are the AI webinars really saying? What are the common themes, if any? While the first and second chapters focused on AI’s ubiquity and limitations respectively, this third installment focuses on the replacement of humans and human work.

Will Jobs Be Lost Because of AI?

AI is a threat to most jobs including those in the market research industry but this is most especially true with any repetitive or routine work grounded by established knowledge or processes. AI provides the advantages of streamlining your knowledge base and shortcutting processes. If you’re on the process side and you fail to embrace AI, clients might find you costlier and less optimized.

The market research industry had already learned this lesson in the early 2000s when the big companies didn’t take online research seriously. They subsequently found themselves trying to catch up some years later after the widespread acceptance and adoption of online research. Whoever waits too long or neglects to embrace the newest tool would most likely fall behind as the industry shifts towards AI-driven processes.

What are the AI webinars saying about Human Replacement?

This doesn’t necessarily mean that humans would be fully replaced and displaced by AI in market research. AI is, after all, a tool. All tools revolutionize optimization but optimization by definition doesn’t make things better. AI will revolutionize things, but it is not the big revolution that will make everything different.

To illustrate that last point, a question was raised in one of the webinars about the possibility of a data collection tool that can replace surveys. The panelists gave no definitive answer, since it’s more a question of what surveys would actually look like. That would depend on what one wants to accomplish, the type of information sought, and how the surveys would engage respondents and elicit reactions.

The subject of AI-powered market research alone attracts investors. Embracing AI would not only optimize your market research processes but also add value to your insights. Having said that, AI places everybody on the same playing field, though those who recognize and seize the opportunity to experiment with AI are able to gain an edge. The key would be to build on experience rather than purely on the thirst for innovation; try to be at the front of things, but don’t try to be the first one. Try to find a good balance by going with the flow while making smart moves and decisions.

It’s been noted that the profile of the researcher of the future is a little bit more techy and into IT integration. New business intelligence leaders today have IT backgrounds, and this is different from two decades ago. Even in a world with AI-based market research, there would be room for the human factor that adds value from experience — something AI won’t be able to replace.

Jun

AI In Market Research: The Story So Far – Chapter 2: Limitations of AI

jerry9789 0 comments artificial intelligence, Burning Questions

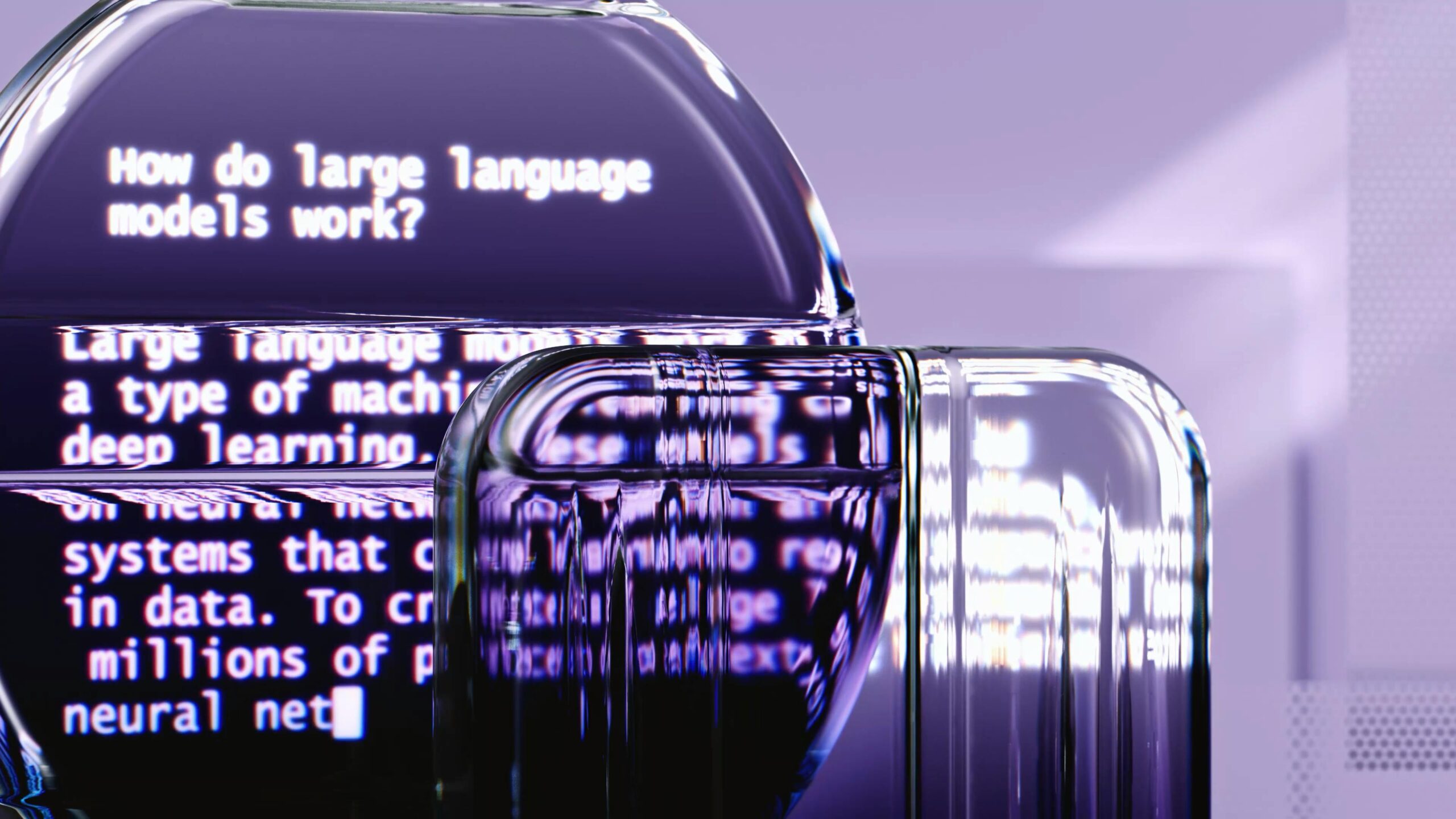

Despite AI’s expanding popularity in market research, experts are fully aware that there is still a lot of ground to cover regarding its effectiveness and optimization for use cases, along with understanding and mitigating its risks and limitations.

These limitations reveal themselves most clearly in efforts to replicate human behavior. One research paper on a survey employing Large Language Models observed how effective these LLMs are in understanding consumer preferences, with their behaviors consistent with four economic theories, but noted that the models were highly sensitive to the prompts they were given. In addition, there were indications of positional bias, wherein the first concept presented was selected more often than the others.
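As a rough illustration of how positional bias like this might be checked, here is a minimal Python sketch. It assumes each survey trial has been logged as the order in which concepts were shown plus the concept that was chosen. The function name `first_position_rate` and the sample trials are hypothetical and do not come from the paper.

```python
def first_position_rate(trials):
    """Return the share of trials where the first-shown option was chosen.

    Each trial is a tuple: (options_in_order_shown, chosen_option).
    """
    if not trials:
        return 0.0
    hits = sum(1 for shown, chosen in trials if shown[0] == chosen)
    return hits / len(trials)


# Hypothetical log: the same three concepts shown in rotated order.
trials = [
    (["A", "B", "C"], "A"),
    (["B", "C", "A"], "B"),
    (["C", "A", "B"], "C"),
    (["A", "C", "B"], "B"),
]

rate = first_position_rate(trials)
chance = 1 / 3  # expected rate with three options and no positional bias
print(f"first-position rate: {rate:.2f} (chance level: {chance:.2f})")
```

A rate well above the chance level across many randomized orderings would suggest the respondent, human or LLM, favors whatever appears first rather than the concept itself.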

AI has also been found to be too optimistic, tech-forward, and self-interested. For example, ChatGPT inherently focuses on maximizing expected payoffs, whereas a person would often act in a risk-averse way when facing gains and a risk-seeking way when facing losses. AI also exhibits a generally higher level of brand association than humans, resulting in higher brand scores. However, it struggles with lesser-known topics, notably in scenarios where new commercial products are tested and targeted toward a specific audience.

While they can be mitigated through careful prompt engineering, AI hallucinations are an unintended effect of these models’ helpfulness: they generate unnecessary output stemming from patterns or elements they perceive but that are nonexistent or imperceptible to human observers.

And while more on the side of risks than limitations, there is an understandably and famously increasing concern from artists over how text-to-image generators threaten to replace them and their work, just as there are certain roles in the market research sector that are in danger of being taken over by AI.

Perhaps the ideal recommendation for utilizing AI while keeping in mind its limitations is to use it in cases where it’s most effective and productive with the understanding that it might excel in one scenario, but it doesn’t mean it will be just as effective in another situation.

May

AI In Market Research: The Story So Far – Chapter 1: Adapt or Get Left Behind

jerry9789 0 comments artificial intelligence, Burning Questions

Whether you like it or not, AI is here to stay. Yes, AI is a threat to most jobs, including those in the market research industry, since it shortcuts processes while optimizing operational efficiency. While market research technology didn’t develop as fast as other industries in the early to mid-2000s, the advent and subsequent mainstream appeal of AI has forced market research to get with the times. You’re in trouble if you fail to embrace it but if you do, you get to be on the winning side.

Experts expressed that we’re still in the early exploratory stages of AI but there is already depth in its application in market research. Take, for example, the humanization of surveys. An interactive and dynamically probing AI improved overall data quality in more than one experiment due to an increased engagement from respondents resulting from a sense of appreciation over the perceived but simulated attention paid to them and their responses during the survey. In the same vein, employing a conversational AI voice has been shown to dramatically drive engagement for better data.

That latter effort to humanize surveys has created an influx of voice responses and content, leading to the new question of what we should now do with all those resources, which would be a byproduct of AI-based solutions. Of course, LLMs and other existing AI models would be employed to help find the answer to this question.

Aside from tackling dark data, AI has also displayed impressive capabilities in answering choice tasks, performing especially well with well-known topics and products, and even outdoing humans in some surveys where humans get confused or find it hard to render a judgment. AI has also been considered for adapting existing survey data to a new topic to save time. AI’s role in market research might still be experimental at this point, but it has grown to the point where it’s being utilized and adapted to take on one challenge after another.

Our second entry in this four-part blog series highlights some of AI’s risks and limitations, and how understanding and mitigating these factors can lead to its effective and optimal utilization.

May

AI Webinars Are Everywhere – What Are They Really Saying?

jerry9789 0 comments artificial intelligence, Burning Questions

With AI becoming more ubiquitous each passing year, it’s no surprise that webinars dedicated to the subject have been springing up everywhere. Amid the hype, people are either curious, interested, or to some degree invested in what AI’s increasing popularity means for them as well as the industries they’re part of. These webinars serve as the perfect platform for industry experts to share their experiences, thoughts, and opinions on AI’s current and future implications.

What are these AI webinars really saying? We sent one of our staff members in search of the answers. Each webinar talks about the impact of AI on the economy, society, and culture, but they must share some common themes or overarching ideas. What are these common ideas? To get at the answers, we asked our staff member (Emil Deverala) to focus on the impacts on an industry we truly understand: market research.

During April and May 2024, Emil attended three AI webinars: “Market Research in an AI World,” “AI in Marketing Research: Expert Panel Discussion,” and “Building New Business: Five Ways Firms Are Driving New Revenue with Automation And AI.” After each webinar, Emil was asked to not only summarize the items that were discussed, but also share his larger thoughts about what the webinar was really trying to say to the world about what we can expect from AI.

Emil eventually boiled things down to four main ideas or themes. In this series of blogs, we’ll be exploring each of those themes. Here’s the first in our series. It focuses on why people in the market research industry need to pay attention to AI in the first place.

Sep

What It Means to Choose or Decide In The Age of AI

jerry9789 0 comments artificial intelligence, Burning Questions

Longstanding Concerns Over AI

From an open letter endorsed by tech leaders like Elon Musk and Steve Wozniak which proposed a six-month pause on AI development to Henry Kissinger co-writing a book on the pitfalls of unchecked, self-learning machines, it may come as no surprise that AI’s mainstream rise comes with its own share of caution and warnings. But these worries didn’t pop up with the sudden popularity of AI apps like ChatGPT; rather, concerns over AI’s influence have existed for decades, expressed even by one of its early researchers, Joseph Weizenbaum.

ELIZA

In his book Computer Power and Human Reason: From Judgment to Calculation (1976), Weizenbaum recounted how he gradually transitioned from exalting the advancement of computer technology to a cautionary, philosophical outlook on machines imitating human behavior. As encapsulated in a 1996 review of his book by Amy Stout, Weizenbaum created a natural-language processing system he called ELIZA which is capable of conversing in a human-like fashion. When ELIZA began to be considered by psychiatrists for human therapy and his own secretary interacted with it too personally for Weizenbaum’s comfort, it led him to start pondering philosophically on what would be lost when aspects of humanity are compromised for production and efficiency.

Copyright chenspec (Pixabay)

The Importance of Human Intelligence

Weizenbaum posits that human intelligence can’t be simply measured, nor can it be restricted to rationality. Human intelligence isn’t just scientific; it is also artistic and creative. On what a monopoly of the scientific approach would mean, he remarked, “We can count, but we are rapidly forgetting how to say what is worth counting and why.”

Weizenbaum’s ambivalence towards computer technology is further supported by the distinction he made between deciding and choosing: a computer can make decisions based on its calculation and programming, but it cannot ultimately choose, since that requires judgment capable of factoring in emotions, values, and experience. Choice is fundamentally a human quality. Thus, we shouldn’t leave the most important decisions to be made for us by machines but should rather resolve matters from a perspective of choice and human understanding.

AI and Human Intelligence in Market Research

In the field of market research, AI is being utilized to analyze a multitude of data to produce accurate and actionable results or insights. One such example is deep learning models which, as Health IT Analytics explains, filter data through a cascade of multiple layers. Each successive layer improves its result by using or “learning” from the output of the previous one. This means the more data deep learning models process, the more accurate the results they provide thanks to the continuing refinement of their ability to correlate and connect information.
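To make the idea of a layered cascade a little more concrete, here is a minimal Python sketch of data passing through two layers, where each layer consumes the output of the one before it. It is an illustration under simplifying assumptions, not how any production model is built: the weights are hand-picked, hypothetical values, and the training step in which a real model adjusts those weights is omitted.

```python
def dense_layer(inputs, weights, biases):
    """One layer: a weighted sum per neuron, followed by a ReLU activation."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(max(0.0, total))  # ReLU: negative sums become 0
    return outputs


def forward(inputs, layers):
    """Pass data through the cascade; each layer uses the previous output."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations


# Hypothetical, hand-picked weights (a real model would learn these from data).
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),  # layer 1: 2 inputs -> 2 outputs
    ([[1.0, 2.0]], [-1.0]),                   # layer 2: 2 inputs -> 1 output
]

result = forward([3.0, 1.0], layers)
print(result)  # [5.0]
```

The first layer turns the inputs `[3.0, 1.0]` into `[2.0, 2.0]`, and the second layer combines those into a single value, which is the sense in which each layer builds on the one before it.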

While you can depend on the accuracy of AI-generated results, Cascade Strategies takes it one step further by applying a high level of human thinking. This allows Cascade Strategies to interpret and unravel insights a machine would’ve otherwise missed because it can only decide, not choose.

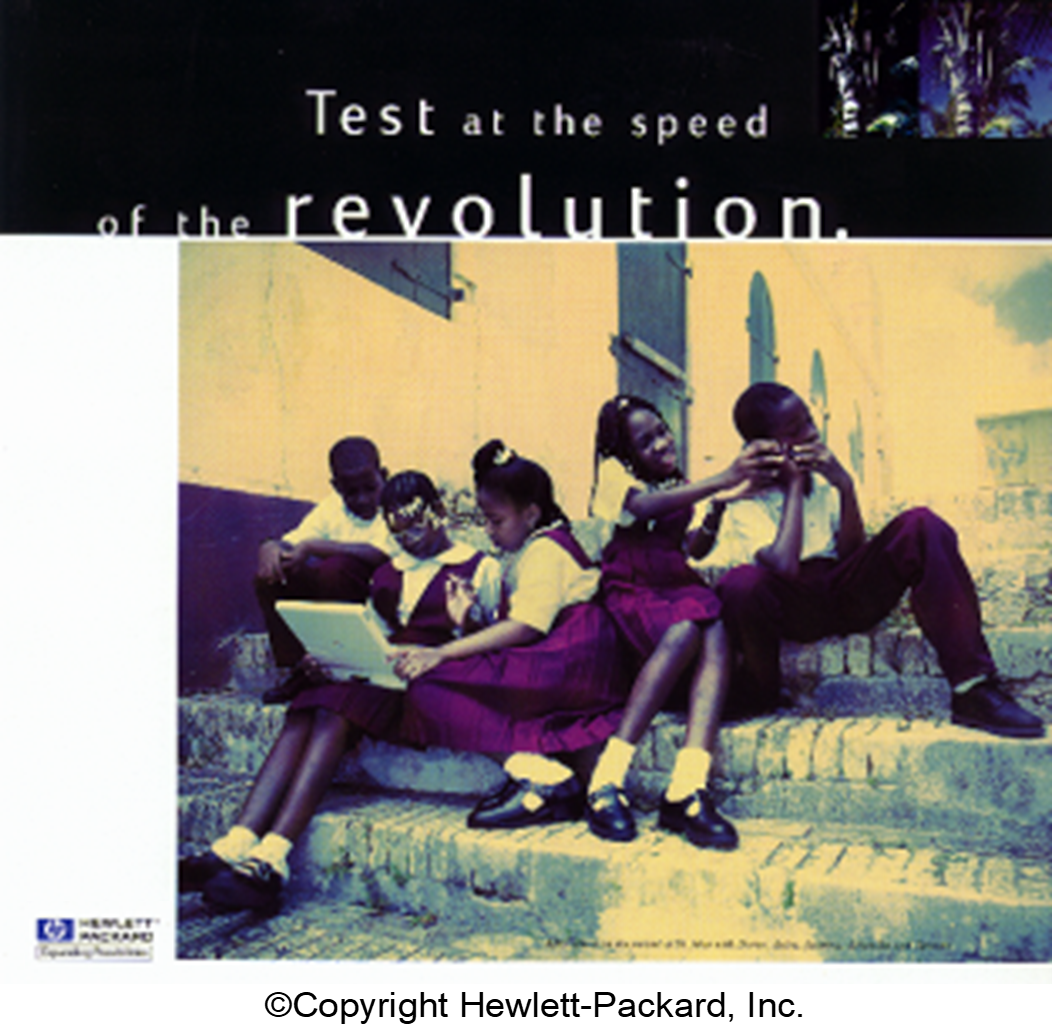

Take a look at the market research project we performed for HP to help create a new marketing campaign. As part of our efforts, we chose to employ very perceptive researchers to spend time with worldwide HP engineers as well as engineers from other companies.

This resulted in our researchers discovering that HP engineers showed greater qualities of “mentorship” than other engineers. Yes, conducting their own technical work was important but just as significant for them was the opportunity to impart to others, especially younger people, what they were doing and why what they were doing was important. This deeper level of understanding led the way for a different approach to expressing the meaning of the HP brand for people and ultimately resulted in the award-winning and profitable “Mentor” campaign.

If you’re tired of the hype about AI-generated market research results and would like more thoughtful and original solutions for your brand, choose the high level of intuitive, interpretive, and synthesis-building thinking Cascade Strategies brings to the table. Please visit https://cascadestrategies.com/ to learn more about Cascade Strategies and more examples of our better thinking for clients.

Aug

The Future Is Here

“Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.”

With just 22 words, we are ushered into a future once heralded in science fiction movies and literature of the past, a future our collective consciousness anticipated but has now taken us by surprise upon the realization of our unreadiness. It is a future where machines are intelligent enough to replicate a growing number of significant and specialized tasks. A future where machines are intelligent enough to not only threaten to replace the human workforce but humanity itself.

Published by the San Francisco-based Center for AI Safety, this 22-word statement was co-signed by leading tech figures such as Google DeepMind CEO Demis Hassabis and OpenAI CEO Sam Altman. Both have also expressed calls for caution before, joining the ranks of other tech specialists and executives like Elon Musk and Steve Wozniak.

Earlier in the year, Musk, Wozniak, and other tech leaders and experts endorsed an open letter proposing a six-month halt on AI research and development. The suggested pause is presumed to allow for time to determine and implement AI safety standards and protocols.

Max Tegmark, physicist and AI researcher at the Massachusetts Institute of Technology and co-founder of the Future of Life Institute, once held an optimistic view of the possibilities granted by AI but has now recently issued a warning. He remarked that humanity is failing this new technology’s challenge by rushing into the development and release of AI systems that aren’t fully understood or completely regulated.

Henry Kissinger himself co-wrote a book on the topic. In The Age of AI, Kissinger warned us about AI eventually becoming capable of making conclusions and decisions no human is able to consider or understand. This notion becomes more unsettling when considered in the context of everyday life and warfare.

Working With AI

We at Cascade Strategies wholeheartedly agree with this emerging consensus, and we believe that we’ve been diligent in upholding the responsible and conscientious use of AI. Not only have we long been advocating for the “Appropriate Use” of AI, but we’ve also made it a hallmark of how we find solutions for our clients’ needs in market research and brand management.

Just consider the work we’ve done with the Expedia Group. For years, they’ve utilized a segmentation model to engage with their lodging partners by offering advice that could lead to the partner winning a booking over a competitor. AI filters through the thousands of possible recommendations to arrive at a shortlist of the best selections optimized for revenue.

With the continued growth and diversification of their partners, they then needed a more effective approach in engaging and appealing to them, something that focuses more on that associate’s behavior and motivations. We came up with two things for Expedia: a psychographic segmentation formed into subgroups based on patterns of thinking, feeling, and perceiving to explain and predict behavior, and more importantly, a Scenario Analyzer that utilizes the underlying AI model but now delivers recommendations in very action-oriented and compelling messaging tailor-fit for that specific partner.

The best part about the Scenario Analyzer is that whether the partner follows any of the recommended advice or does nothing, Expedia still stands to make a profit while maintaining an image of personalized attentiveness to their partner’s needs. And ultimately, it’s the partner who gets to decide, not the AI.

Copyright Tara Winstead

Our Future With AI

This is how we view and approach AI: it’s not the end-all, be-all solution but rather an essential tool for increasing productivity and efficiency in tandem with excellent human thinking, judgment, and creativity. Yes, it is going to be part of our future, but in line with the new consensus, we believe that AI shaped by human values and experience is the way to go with this emerging and exciting technology.

Aug

The Impact of AI

In The Age of AI, which Henry Kissinger co-wrote with Eric Schmidt and Daniel Huttenlocher, Kissinger tried to warn us that AI would eventually have the capability to come up with conclusions or decisions that no human is able to consider or understand. Put another way, self-learning AI would become capable of making decisions beyond what humans programmed into it and base such conclusions on what it deems the most logical approach, regardless of how negative or devastating the consequences can be.

A common example to illustrate this point is how AI had already transformed games of strategy like chess, where given the chance to learn the game for itself instead of using plays programmed into it by the best human chess masters, it executed moves that have never crossed the human mind. And when playing with other computers that were limited by human-based strategies, the self-learning AI proved dominant.

When applied to the field of warfare, this could possibly mean AI proposing or even executing the most inhumane of plans regardless of human disagreement simply because it considers such a decision the most logical step to take.

The Influence of AI

As part of Kissinger’s warning, it’s been noted just how far-reaching AI’s influence already is in modern life, especially with its usage in innocuous things such as social media algorithms, grammar checkers, and the much-hyped ChatGPT. With the growing dependency on AI, there runs the risk of human thinking being eclipsed by machine-based efficiency and effectiveness. And how it arrives at such efficient and effective decisions becomes questionable because it could become difficult or near impossible to trace what it has learned along the way.

Just imagine someone making a decision influenced by the information fed to them by AI and yet failing to rationalize the thinking behind such a decision. That particular human may not realize it, but at that point they’re living in an AI world, where human decision-making is imitating machine decision-making rather than the reverse. It was this interchangeability Alan Turing was referring to with his famous postulate about artificial intelligence — the so-called “Turing Test” — which holds that you haven’t reached anything that can be fairly called AI until you can’t tell the difference.

Copyright Pavel Danilyuk

Appropriate Use of AI

However, it’s been pointed out that the book doesn’t follow “AI fatalism,” a common belief that AI is inevitable and humans are powerless to affect this inevitability. The authors wrote that we are still capable of controlling and shaping AI with our human values, which is the “appropriate use” we at Cascade Strategies have been advocating for quite some time. We have the opportunity to limit or restrain what AI learns or to align its decision-making with human values.

Kissinger had sounded the warning while others had already made calls to start limiting AI’s capabilities. We are hopeful that in the coming years, with the best modern thinkers and tech experts at the forefront, we progress to more of an AI-assisted world where human agency remains paramount instead of an AI-dominated world where inscrutable decisions are left up to the machines.

Jul

What To Make Of ChatGPT’s User Growth Decline

jerry9789 0 comments artificial intelligence, Burning Questions, Uncategorized

The Beginning Of The End?

More than six months after launching in November 2022, ChatGPT recorded its first decline in user growth and traffic in June 2023. Spiceworks reported that the Washington Post surmised quality issues and summer breaks from schools could have been factors in the decline, aside from multiple companies banning employees from using ChatGPT professionally.

Brad Rudisail, another Spiceworks writer, opined that a subset of curious visitors driven by the hype over ChatGPT could’ve also boosted the numbers of early visits, the dwindling user growth resulting from the said group moving on to the next talk of the town.

The same article also brings up open-source AI gaining ground on OpenAI’s territory as a possible factor, thanks to models that are customizable, faster, and more useful, on top of being more transparent and less prone to cognitive biases.

Don’t Buy Into The Hype

But perhaps the best takeaway is Mr. Rudisail’s point that we’re still in the early stages of AI and it’s premature to herald ChatGPT’s downfall with a weak signal like decreased user growth. For all we know, this is what could be considered normal numbers, with earlier figures inflated by the excitement surrounding its launch. Don’t buy into the hype is a position we at Cascade Strategies advocate when it comes to matters of AI.

The advent of AI has taken productivity and efficiency to levels never seen before, so the initial hoopla over it is understandable. However, we believe people are now starting to become a little more settled in their appraisal of AI. They’re starting to see that AI is pretty good at “middle functions” requiring intelligence, whether that be human or machine-based. But when it comes to “higher function” tasks which involve discernment, abstraction and creativity, AI output falls short of excellence. Sometimes mediocrity is acceptable, but for most pursuits excellence is needed.

Excellence Achieved Through High Level Human Thinking

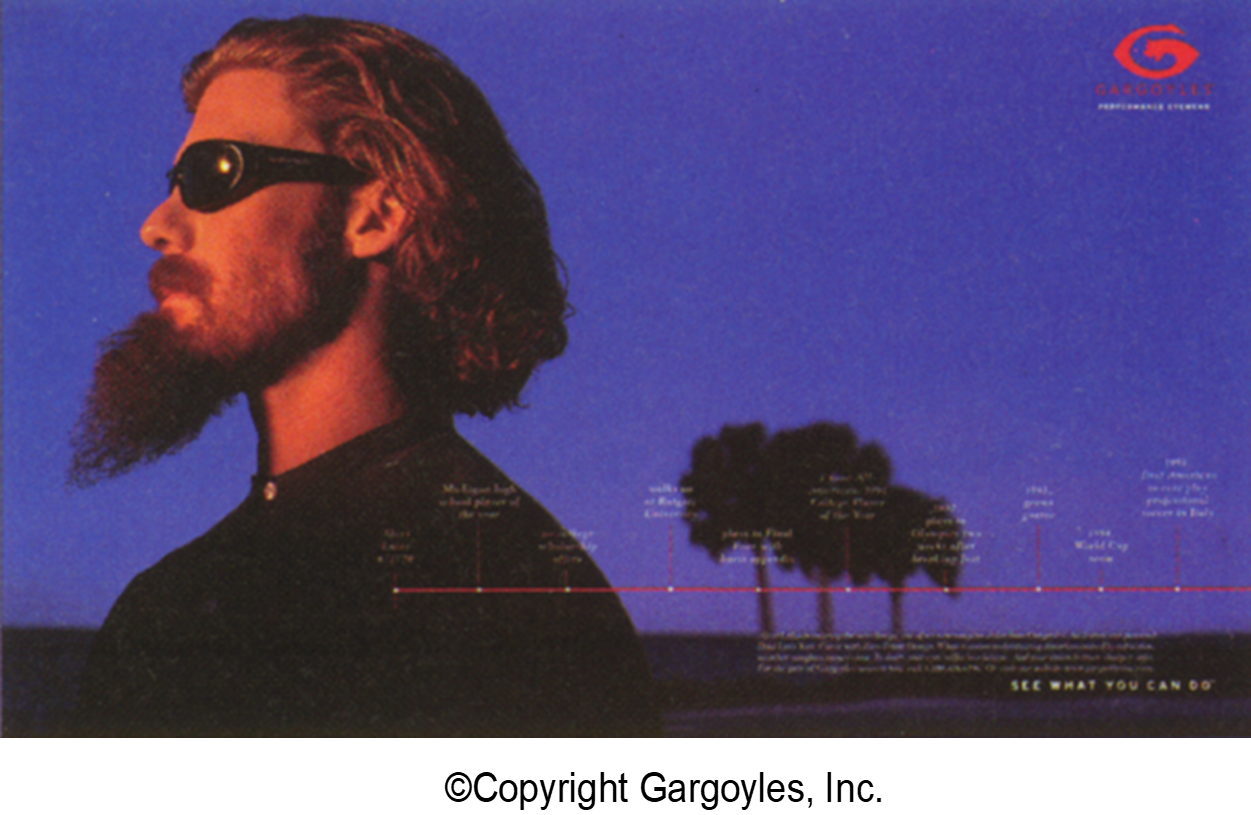

To illustrate just how AI would come out lacking in certain activities, let’s consider our case study for the Gargoyles brand of sunglasses. ChatGPT can produce a large number of ads for sunglasses at little or no cost, but most of those ads won’t bring anything new to the table or resonate with the audience.

However, when researchers spent time with the most loyal customers of Gargoyles to come up with a new ad, they discovered a commonality that AI simply did not have the power to discern. They found a unique quality of indomitability among these brand loyalists: many of them had been struck down somewhere in their upward striving, and they found the strength and resolve to keep going while the odds were clearly against them. They kept going and prevailed. The researchers were tireless in their pursuit of this rare trait, and they stretched the interpretive, intuitive, and synthesis-building capacities of their right brains to find it. Stretching further, they inspired creative teams to produce the award-winning “storyline of life” campaign for the Gargoyles brand.

All told, this is a story of seeking excellence, where hard-working humans press the ordinary capacities of their intellects to higher layers of understanding of a subject matter, not settling for simply a summarization of the aggregate human experience on the topic. This is what excellence is all about, and AI is not prepared to do it. To achieve it, humans have to have a strong desire to go beyond the mediocre. They have to believe that stretching their brains to this level results in something good.

How To Make “Appropriate Use” of AI

But that is not to say that AI and high level human thinking can’t mix. The key is to recognize where AI would best fit in your process and methodologies, then decide where human intervention comes in. This is what we call “Appropriate Use” of AI.

Take for example our case study for Expedia Group and how they engage with millions of hospitality partners. Expedia offers their partner “advice” which helps them receive a booking over their competitors. With thousands of pieces of advice to give their partners, they utilize AI to filter through all those recommendations and present only the best ones to optimize revenue. Cascade Strategies has helped them further by creating a tool called Scenario Analyzer, which uses the underlying AI model to automate the selection of these most revenue-optimal pieces of advice.

Either way, the end decision on which advice to go with (or whether they accept any advice at all) ultimately still comes from Expedia’s partner, not the AI.

Copyright ClaudeAI.uk

A Double-edged Sword

As you can see with ChatGPT, it’s easy to get carried away with all the hype surrounding AI. At launch, it was acclaimed for the exciting possibilities it represented, but now that it has hit a bump in the road, some people and outlets act as if ChatGPT is on its last legs. Hype is good when it’s necessary to draw attention; unfortunately, in most cases it sets up the loftiest of expectations and overrides good sense.

This is why we think a sensible mindset is the best way to approach and think about AI — to see it for what it really is. It’s a tool for increasing productivity and efficiency, not the end-all and be-all, as there is still much room for excellent human thinking backed by experience and values to come into play. Our concerns for now may not be as profound and dire as those expressed by James Cameron, Elon Musk, Steve Wozniak and others, but we’d like to believe that “appropriate use” of AI is the key towards better understanding and responsible stewardship of this emerging new technology.