26

Mar

Software Marketing Today

If you look all over the Internet, you’ll find a multitude of articles talking about the most effective strategies for marketing your software development company. Ranging from seven to fifteen different strategies, these articles feature proven techniques like email marketing, social media or content marketing, pay-per-click (PPC) advertising or paid ads, and the like. For our part, we boiled it down to five key strategies here.

But with competition in the software development industry as tough as it is, it would be no surprise if you have thought about doing more to target new customers. Most software companies are likely using the same marketing strategies you’ll find online, so employing a different tactic would help your company stand out from the rest or dominate a particular niche. But how do you come up with a different game plan when the field of software marketing has apparently been mapped out with all the strategic information available out there?

Most software marketing strategies focus on targeting and resonating with the ideal customer, from identifying the most profit-optimal client to tailoring your messaging to appeal to that buyer persona. They talk about the variety of approaches you can take to capture the attention and engage with your target consumer. But what if aside from thinking about who your ideal customer is, you take your marketing one step further by coming up with the best possible user experience, from the time your target persona first hears about your company to the point that they’re not only your regular customer but also your company’s best advocate?

Copyright Karolina Grabowska

Focusing On the Customer Experience

Now you might be thinking that this sounds either like the same thing as, or even antithetical to, optimizing your marketing efforts by recognizing your ideal customer. You will still do the same work you’ve done to identify and reach your target client, such as demographic and psychographic research and even SEO, but with this approach you are looking not just outward through the lens of company marketing but also from the perspective of a customer looking for a solution to their problem.

With that thought, you start off with the question of “exactly what problem was my software meant to solve and how does it solve it?” Are you able to deliver that idea in words that are easily understood by your ideal customer? Are you targeting the end-user or is there a decision-maker involved? If there are other similar companies offering the same product, how do you set yourself apart with what you can do differently from the vantage point of a prospective client? Can you encapsulate all that if all you have is one tagline or even one image to catch consumer attention? If it does catch attention, what would hook your best prospect into learning more about the software? As you can tell, some if not most of these questions also serve as a good foundation for the branding of your software product.

Copyright Fauxels (Pexels)

Local Presence Focus

You can also consider looking locally to see if the solution your software is offering is relevant to your community. If everything falls into place, not only will you enjoy the benefit of a consumer base right at home but more often than not, local communities have proven to strongly appreciate and support homegrown talent. This in turn could lead to an optimized and visible Google Business profile in search results and Google Maps. Just imagine how a local consumer feels discovering that a company offering a solution to their problem is found right in their own neighborhood.

Digital marketing is all the rage nowadays, but exploring your local market also opens up the opportunity to try traditional marketing such as flyers and mailers. You can also try hosting events or partnering with local companies. Both are good ways to gain experience, especially if you plan to expand soon and would consider similar marketing activities and collaborations like influencer marketing and guest blogging.

Focusing locally is also a good place to start with SEO. Backed by keyword tools and planners that generate long-tail keywords, approaching SEO with the mindset of a local consumer with a specific objective lets you experiment more efficiently with a narrower set of key phrases than you would have to work with had you jumped into a larger market. Once you figure out which keywords from your localized set work best, you can riff on them as you start expanding beyond your community.
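To make the local long-tail idea concrete, here is a minimal sketch of how a narrowed set of candidate phrases might be generated before checking them in a keyword tool. The function name, the seed phrases, the town name, and the intent words are all hypothetical and chosen only for illustration; a real keyword planner would supply search volumes, which this sketch does not.

```python
from itertools import product


def local_long_tail_keywords(seed_phrases, locations, intents):
    """Combine intent words, seed phrases, and locations into candidate phrases.

    This only builds candidate strings; it does not estimate search volume.
    """
    candidates = []
    for intent, seed, location in product(intents, seed_phrases, locations):
        phrase = f"{intent} {seed} in {location}".strip().lower()
        if phrase not in candidates:  # keep first occurrence, preserve order
            candidates.append(phrase)
    return candidates


# Hypothetical inputs for illustration only.
keywords = local_long_tail_keywords(
    seed_phrases=["invoicing software", "billing app"],
    locations=["Springfield"],
    intents=["best", "affordable"],
)
for phrase in keywords:
    print(phrase)
```

Starting with one location and a handful of seeds keeps the list short enough to test by hand, which is the point of beginning locally; more locations can be added as you expand.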

Copyright MR-PANDA

Website Optimization and Multimedia Content

You can then optimize your website and its content with those keywords. Design your website with the added perspective of a customer visiting for the first time: navigating it should be intuitive, with an engaging interface and interesting content. Make sure it’s mobile-friendly as well. If you don’t know it yet, search engines favor fast-loading websites, so regularly test your site speed, especially whenever you make changes or updates. Your Google Business profile might capture would-be clients’ attention, but your website is where most of the action should take place, so be sure you have plenty of quality backlinks to it.
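As a rough illustration of the advice to test site speed after every change, the sketch below times how long it takes to download a page's HTML several times and reports the median. It is only an approximation under stated assumptions: it measures the raw download, not images, scripts, or rendering, so a dedicated page speed tool will give a fuller picture. The function names are hypothetical, and the URL in the comment is a placeholder.

```python
import statistics
import time
import urllib.request


def fetch_page(url, timeout=10):
    """Download the page body using the standard library."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read()


def median_load_time(url, runs=5, fetch=fetch_page):
    """Return the median number of seconds taken to fetch `url` over `runs` tries."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        fetch(url)
        timings.append(time.perf_counter() - start)
    return statistics.median(timings)


# Example call (placeholder URL, requires network access):
# print(median_load_time("https://example.com/"))
```

Recording the median before and after a site update gives a simple way to notice when a change has slowed the page down.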

Whether your website is found through search results or a backlink, your first-time visitor would be expecting to find content elaborating on why your software is the right product for them. Aside from a blog section and other written elements, make sure to devote some space for informative multimedia materials like video tutorials or walkthroughs. Your written content can have all the information you would like to relay about your software and your company, but your visitor might not have all the time in the world to go through all those. Instead, they might stick around for a short but engaging and entertaining video.

Incorporate short animated infographics or explainers into your website too, to share information about your software and your company. Vary your videos and animated graphics from informative and technical to fun and entertaining. This is also an opportunity to make your software stand out from other similar products or for your branding to express its uniqueness. You may also use the same multimedia content for your video or email marketing so there’s a sense of familiarity when your customer lands on your website for the first time.

Speaking of familiarity, you might also engage with your customer even more by providing free trial/limited time offers and Freemium subscriptions right from your website. These allow your customer to enjoy the basic features of your software and truly get a feel for what your product offers, perhaps leading them to opt for a Premium or higher-tier subscription for additional or complete access. If possible, you can offer a certain feature of your software to be available on your website; for example, a photo editor whose output could be downloadable either in a reduced but acceptable quality or with a watermark.

Making free trial/limited time offers and Freemium subscriptions available on your website also helps better position the pricing information of your software. Sure, you can include a pricing comparison chart between your software and its competitors, but firsthand experience of your product could prove to be the more convincing or deciding factor for your customer. Nevertheless, ensure that you are clear and transparent with the pricing for your software.

Copyright Andrea Piacquadio

Testimonials and Case Studies

A tutorial or explainer video would give your customer the information they need to understand how to use your software, but it might resonate better with them to use a video to tell the story behind the origins of your product. Sure, your technical videos would spell out what problem your software is solving, but they won’t be able to tap into connections of relatability that might be achieved by a video with a compelling story on how you came up with your product. Trust and loyalty can be earned by a product and company that consistently deliver what they promised, but you can add another layer to that connection by becoming relatable and personal to your customer.

That brings us to client testimonials. Depending on their length or how they are delivered, testimonials may get only a quick once-over, or your customer may find one or two from clients with similar experiences who vouch for the effectiveness of your software. Written testimonials are fine and the norm, but you might consider having a few published in video format. Depending on your research, you might even be able to use your video testimonials in your outbound marketing.

And they don’t all have to be too straight or technical; an effervescent and witty one-liner from a supporter that drew a chuckle from you might have the same effect on visitors to your website and lighten things up.

If applicable, case studies can be employed for the combined effect of walkthroughs and testimonials. While they’re usually delivered from the technical perspective of the company, they appeal to a tech-savvy customer who is looking for a more in-depth or niche look at how your software can be applied. Bonus points too if the client using the software in the case study is well-known and well-respected. But don’t let all that technical material limit you to jargon and technobabble; consider using language that’s less robotic or monotone and more conversational and organic, taking the opportunity whenever possible to explain or simplify technical terms or to translate highly specialized situations into simple, relatable language.

Client success stories can also function as a case study or be as concise as a testimonial. In addition, you can also utilize or embed webinar recordings in your website. The goal of these elements in your website is to demonstrate to your would-be customers that their trust and confidence would be well-placed in both your software and your company.

Copyright Fauxels (Pexels)

Online and Offline Community Marketing

Don’t just limit that trust and confidence to the confines of your website, but expand it to the greater community out there. You’ve developed your blogs, guides/tutorials, multimedia, case studies and other materials on your website with the mindset of not only being informative and instructional, but also engaging at the same time, so the same content should work just as well on social media and the like.

Yes, you are promoting your software and company on social media but instead of being overly promotional, provide value to your target audience by directing your content toward addressing common pain points and questions. This way, you establish yourself as a thought leader while connecting with your audience at the same time.

Don’t overload your posts with information, though; you can use the “breadcrumb” approach, where you open with a particular pain point, lightly touch on what your software can do to solve it, and then add a link back to your website for readers who would like to learn more. Aside from sharing a whole video or webinar, you can also lift a particularly interesting section from it to share on social media along with a link where they can watch the full content. Break the monotony every now and then by sharing funny or witty content that’s relevant to your software or industry, or to a particular occasion or holiday.

Be mindful of the audience and the platform you’re sharing your content with as well; your entertaining and engaging videos would connect well with the Facebook or Twitter crowd, but the more technical stuff such as case studies and webinars might be better received by industry experts, potential professional connections and decision-makers on LinkedIn.

Just as you would engage with your audience, potential and existing customers and their questions and concerns on your social media accounts, you might as well do the same at the forum threads of Quora, Reddit, and other online communities to help build brand awareness and your industry expert reputation.

Knowing or understanding your target audience and the platform would also help lead to tapping into influencer marketing, as you observe the most relevant online personalities drawing the most engagement with likes, reactions, shares, retweets, shoutouts, and more. The same can be applied for other companies you can consider partnering with or guest blogging for, as well as other products you can integrate with, allowing you to connect to new customer bases you wouldn’t have otherwise reached. Of course, you would still need to carefully evaluate the pros and any cons of partnering with a particular influencer, company or product.

In addition to a strong online presence, there’s a larger offline community that you shouldn’t forget to connect with as well. Make your presence felt in the real world among customers, decision-makers, and other professionals in the field by attending industry events, hosting live demos, participating in local community events or, if possible, sponsorship programs.

And what could be more real to a would-be customer than someone they know who not only uses your product but has good things to say about it? Most marketing strategies focus on new customer acquisition, but it is widely reported that retaining an existing client is much more cost-effective than acquiring a new one. A key point to keep in mind is to treat an existing client like a new customer by also extending special offers to them from time to time, like free trials, limited-time promotions, or exclusive first access to new or upcoming features. This sense of exclusivity can help counter the feeling that only new customers are getting the better deals. Current customers have, in fact, rightfully earned the privileges of loyalty. Imagine how much more powerful and transformative it is to have positive word-of-mouth generated by a long-time customer advocating on your behalf, thanks to this sense of exclusivity and belonging they feel.

Copyright geralt (Pixabay)

As we noted earlier, optimizing the user experience from prospect to advocate from your customer’s perspective goes hand in hand with the conventional software marketing approach of identifying and targeting your ideal consumer. Perhaps this is a novel approach to you, or perhaps you already have a lot on your plate with the myriad software marketing strategies available but don’t dare leave any stone unturned. Worry not: you can rely on a market research company that has been performing highly innovative and consistent marketing research work for thirty years and counting.

With a variety of services, Cascade Strategies has been conducting, adapting, and innovating market research studies for over three decades for an honor roll of respected clients. In addition to incorporating ground-breaking methodologies, Cascade Strategies also takes full advantage of the high level of professional research experience and imagination our staff brings to the table to uncover breakthroughs leading to the best marketing solutions for your company. Contact Cascade Strategies today to learn how we can help your software development company do a better job of targeting new customers.

11

Mar

Why Is Market Segmentation Effective?

By now you’ve most likely come across the idea that instead of using the “blanket” approach for marketing by using demographic or geographic data, you and your marketing goals can be better served by identifying your ideal customer and then focusing and tailoring your marketing campaign towards that consumer. This is achieved through a high-quality segmentation study and persona development, as was the case with the “Strivers” and “Empath” personas in our Banner Bank and Capital One case studies, respectively.

To sum it up, when we developed a brand model identifying “Strivers” as the primary segment Banner Bank should focus on, they were able to not only meet but also exceed all key Striver product targets system-wide after two years of implementing the program. Also, we recommended Capital One focus their brand campaign efforts for a personal investment mobile app on the pragmatic thinking type “Empath,” resulting in a highly successful new product introduction. You can learn more about these two case studies and how great research can help financial services companies here.

By recognizing your profit-optimal customer through market segmentation, a financial services company can effectively focus its marketing efforts and resources, optimizing or helping drive down costs, while at the same time engaging more efficiently with the consumer, enhancing satisfaction and loyalty.

But what if we tell you that you can also segment your financial services market so you target not one but different groups of customers?

Copyright geralt (Pixabay)

Single-segment Focus vs. Multi-segment Strategies

“Now hold on a minute,” you might say in your mind as you read that last line. “Didn’t you just say at the beginning of this that identifying your best customer is better than the ‘blanket’ approach?”

Yes, we did say that but no, this is no “blanket” approach. The main reason a financial services company wants to complete market segmentation research is so they can gain actionable insights into how to sell more of their products or services. With high-quality segmentation studies, breaking down your financial services market into different groups uncovers a variety of insights allowing you to craft and leverage different marketing strategies toward these segments. With this data-driven approach, customer segmentation helps financial services companies decide how to offer a customized journey to different kinds of consumers. It also provides the opportunity to tap into niche segments, which are usually smaller groups with considerable potential.

Think of it this way: instead of a blanket, what you have is a different set of marketing playbooks for your various customer segments. The blanket covers primarily the “who” of your market; each of your playbooks not only identifies “who” it is for but also dives deep into questions like “what” type of buyer behavior they have, “why” they behave this way, and “how” best to approach and engage them.

Copyright Gerd Altmann

Why Use Psychographic Segmentation?

Generally, four types of market segmentation can provide a financial services company with actionable segments: Geographic Segmentation, Demographic Segmentation, Behavioral Segmentation, and Attitudinal or Psychographic Segmentation. Out of these four, we’ll be focusing on Attitudinal or Psychographic Segmentation, as it is often considered the most useful way to segment an audience.

Attitudinal or Psychographic Segmentation separates customers by how they think and feel, their attitudes and values. Essentially, it aims to become a window into a buyer’s thought process. It is often considered the most useful segmentation approach because it provides the clearest actionable steps for a company to take as they try to target each segment.

Not only do you gain a deeper understanding of who your customer is, but your financial institution can map out the customer journey more effectively and efficiently with Psychographic Segmentation. It also allows the financial services company to recognize opportunities to offer different or new products/services in response to changes in consumer behavior. In addition to improved customer satisfaction and retention resulting from a client feeling valued, a financial institution that effectively engages with its consumers can also enjoy improved brand perception, which helps with word-of-mouth and referrals and helps it stand out from the competition.

Segmentation study data can come from several sources including survey data, observational data, public panel data, customer relationship management (CRM) databases, and even large-scale public databases such as Data Axle (previously InfoUSA), Experian, LiveRamp (previously Acxiom), and the like. It’s also possible to append demographic and behavioral data to your company’s house list. A financial services company is already sitting on a large pool of customer data; while it’s easy to go down the route of Geographic or Demographic Segmentation when analyzing all that information, converting those data into actionable insights with Psychographic Segmentation would lead to more personalized and meaningful buyer experiences.

Whether it’s for single or multiple segments, Psychographic Segmentation studies can tell you why a particular marketing or messaging approach to a particular segment is likely to be profitable. They can also tell you how to make adjustments toward more effective approaches when the standard approaches are not working. There are many financial services companies that tried demographic targeting and were disappointed with the results, then switched to psychographic targeting and found that their messaging strategies produced much higher rates of response and conversion.
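To show what comparing response and conversion rates across segments might look like in practice, here is a minimal sketch that totals campaign results per segment and computes both rates. The record layout, the segment names, and every number below are made up purely for illustration; they are not results from any study mentioned in this post.

```python
from collections import defaultdict


def rates_by_segment(records):
    """Aggregate campaign records and return response/conversion rates per segment.

    Each record is a dict with keys: segment, sent, responses, conversions.
    Rates are expressed as a fraction of messages sent.
    """
    totals = defaultdict(lambda: {"sent": 0, "responses": 0, "conversions": 0})
    for record in records:
        bucket = totals[record["segment"]]
        bucket["sent"] += record["sent"]
        bucket["responses"] += record["responses"]
        bucket["conversions"] += record["conversions"]

    rates = {}
    for segment, bucket in totals.items():
        sent = bucket["sent"]
        rates[segment] = {
            "response_rate": bucket["responses"] / sent if sent else 0.0,
            "conversion_rate": bucket["conversions"] / sent if sent else 0.0,
        }
    return rates


# Hypothetical campaign records, invented for this example.
campaigns = [
    {"segment": "Segment A", "sent": 1000, "responses": 50, "conversions": 10},
    {"segment": "Segment A", "sent": 1000, "responses": 30, "conversions": 10},
    {"segment": "Segment B", "sent": 500, "responses": 50, "conversions": 25},
]
rates = rates_by_segment(campaigns)
for segment, values in rates.items():
    print(segment, values)
```

Laying the rates side by side like this is one simple way to see which segment a given message is working for and where the approach may need adjusting.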

Copyright Andrea Piacquadio

Cascade Strategies combines the most advanced AI and machine learning tools with market research expertise from over three decades of experience. Let us help your financial services firm convert your customer data into valuable, real, and actionable business insights. Don’t settle for a simple breakdown of your customer data; our experienced team strives for genuine breakthroughs by imaginatively interpreting all that complex quantitative segmentation data. We can also develop creative briefs that can be used for your advertising and website strategy based on the segments we discover, as well as tackle practical tasks, such as predicting the likely revenue to flow from campaigns directed toward specific consumer segments and measuring the actual monetary effectiveness of such campaigns. Contact Cascade Strategies today to see how our approach to segmentation studies can give you real business insights.

27

Feb

What Are The Challenges of Assisted Living Facilities (ALF) Marketing?

With a value of almost $92 billion in 2022, driven by almost 70 million baby boomers in the United States turning 65 between 2011 and 2030, the assisted living industry is poised to continue growing in the coming years. Despite the ever-growing demand, assisted living facilities (ALFs) find that they must stand out from the competition in order to gain the attention of potential residents. Add to that a sizable portion of people surveyed expressing a lack of trust in assisted living stemming from the time of the pandemic, and you have quite the challenge for any ALF looking to cater to its portion of an increasingly aging population.

What Are The Types of ALF Marketing Strategies?

This is where having an effective marketing plan for your ALF comes in. This includes creating a strong and memorable brand identity representing your facility’s goals and values in its logo, slogan, and mission statement. You’ll also need to optimize your website not only with strategically placed Calls-To-Action (CTA) but also with an intuitive and mobile-friendly interface. Your blog and social media should offer relevant and noteworthy content that leans toward the concrete rather than the inspirational, with transparency being key. Companies and even nonprofits have also been utilizing periodic newsletters or e-mail marketing campaigns, video marketing, and the like in their marketing plans; however, we’d like to take your ALF marketing efforts one step further by highlighting some strategies in this post that would help differentiate you from your competitors.

Copyright Andrea Piacquadio

Who Are Your Audiences for ALF Marketing?

First, we need to identify the audience for your ALF marketing. Seniors are of course at the top of the list, but don’t limit yourself to first-time residents; there might be residents of other ALFs who aren’t quite happy with their present arrangements and might be looking to transfer to a facility that could better meet their needs. Seniors value not only the accommodations presented to them but also the amount of independence they could enjoy during their residency, so don’t forget to highlight the activities and socialization your ALF offers aside from facility features.

The children of the potential resident (and in some cases, their spouse, other family members, or guardian) are most often involved with making the decision on which ALF to choose for their loved one. While some seniors have adapted to using computers and smartphones, their children thrived in the digital age and would most likely be looking and researching online for the ALF that best suits the needs of their parent, valuing more the safety and care offered by the facility. Thus, being transparent and specific in your messaging on what service and accommodations your facility offers would help in gaining the trust of the children of the prospective resident.

Although they might not be decision-makers, doctors, social workers, and organizations concerned with senior care are also keeping an eye out for facilities to recommend to their patients considering assisted living. Your ALF marketing would register on their radars. By fostering a credible and well-deserved reputation built on trust and quality service, you can land and keep your facility in their shortlists.

Copyright Andrea Piacquadio

What Advanced ALF Marketing Strategies Target New Residents Most Effectively?

Now that we’ve identified our audiences and generically discussed some strategies typically used in a marketing plan, let’s talk more specifically about some advanced ALF marketing strategies to help you stand out from the rest.

Search Engine Optimization (SEO) – You’ve optimized your website with high-quality content and images, but if it’s not primed for search engines, you’re missing out on generating leads. By using relevant keywords on your website pages and blogs, your website can rank better in search results to make it to the top of the search page. This will help in making your ALF stand out as reputable and an expert in the field.

You can start by researching keywords used by people searching for an ALF in your local area. Favor common keywords over industry terms: instead of “assisted living,” prospects might be searching for “nursing home” or “retirement home.” We also recommend building your website on WordPress, Wix, or similar platforms that offer SEO plugins to help track and measure traffic to your site and give you an idea of which keywords are working best. Look into using Google Analytics to stay on top of your website traffic and conversions, then adapt or adjust your pages and strategies according to those insights.

Paid Digital Ads (Google Ads, Facebook) – Another advantage of using keywords is that you can use them for targeted marketing efforts such as paid Google ads and social media ads on platforms such as Facebook and Instagram. Using paid digital ads in combination with SEO will help maximize the visibility of your ALF by bringing it closer to audiences who might not have otherwise learned or heard about your facility through traditional means.

Aside from uncovering relevant keywords that potential residents and their families are using, you would want to start by targeting local prospects. You can target outside your local area, but this works better if your region offers a unique quality not found in other places, such as warm weather. Seniors and their families would most likely prefer a facility that keeps them close to one another, for easier and more frequent visits, among other reasons. Targeting local people would be key in solidifying both your ALF’s online and local presence, a baseline to consider before expanding to other areas.

As we’ve mentioned earlier, your target demographic isn’t limited to seniors only, as your campaign can also include ads appealing to their immediate family members or guardians. Your Google or Facebook ads would need to be engaging, appealing, and relevant to your target audience for better lead conversion. You might want to utilize ad types that grant you more control options over location and audience targeting.

Your ads need to sell what sets your ALF apart from other local choices. You might even want to offer incentives like a free tour or consultation to get people to sign up and provide their contact details. Digital advertising won’t be a one-time process, so you might need to tweak your ads from time to time as you learn more about what works and what doesn’t.

Copyright RDNE Stock Project

Referrals/Reviews/Testimonials – Convert all the goodwill and outstanding reputation you’ve built on a foundation of good service and trust into potential leads by asking the family and friends of the resident to pass along some good words about your facility to anyone they think is looking for an ALF. Or better yet, why not ask them for a testimonial you can add to your website, either in written form or as an embedded YouTube video? Maybe they could leave a positive review about your ALF on social media or similar platforms. You might also consider implementing a referral program with incentives like discounts or gift cards for the loved ones in your care, or networking with doctors, social workers, and the like, by giving them brochures or leaflets about your ALF. Positive word-of-mouth is just as effective and powerful as any traditional or digital marketing tool you can utilize.

Promote Your ALF on Senior-focused Platforms – Social media platforms like Facebook, Instagram, and even YouTube offer avenues for engaging and attracting prospects with interesting content and updates, while LinkedIn allows your ALF to widen its professional network. However, there are some senior-focused platforms that your marketing can tap into that other ALFs might have overlooked, such as healthcare websites, senior lifestyle blogs, or local community forums. Seniorliving.org has provided a great list of websites dedicated to seniors that is worth checking out.

Community Involvement – Demonstrate corporate social responsibility by getting involved or giving back to your community by volunteering or sponsoring events. You might even want to consider partnering with other businesses or even a nonprofit with similar values. Not only does your ALF benefit from the positive publicity and word-of-mouth, but it also builds relationships and goodwill in the community.

It should be noted that referrals, senior-focused platforms, and community involvement don’t target prospects the way media do, but they allow the ALF to obtain leads and then assess or vet ideal matches for its facility from this pool. In some cases, the ALF might even be able to refer prospects who aren’t a good fit to other ALFs that are better suited to their needs, generating goodwill not only with other facilities but also with the seniors and their families, and contributing to a reputation that the welfare and well-being of the resident are far more important than monetary gain.

Copyright Andrea Piacquadio

Of course, you can also partner with Cascade Strategies to develop an optimal and effective marketing plan for your ALF. In addition to audience segmentation and brand development, Cascade Strategies can also help you formulate an effective marketing plan that includes SEO and paid digital ad marketing. Backed by over three decades of market research experience serving a veritable honor roll of leading US and international companies, Cascade Strategies can help your ALF accomplish a better job of targeting new residents in the changing and growing landscape of the assisted living industry.

09

Feb

Why Acquire New Donors for Your Nonprofit Organization?

They say it’s easier and less costly to keep and maintain existing clients than acquire new ones. The same can be applied to nonprofit donors. But don’t let that stop you from getting new donors to join and support your cause.

Existing donors once were prospects. You acquired them one way or another, formed and built a relationship with them, and nurtured that connection until you had earned their trust and confidence enough to manifest itself in the form of financial or in-kind support. And when that relationship reaches a lull or break concerning the flow of donations, it sure is nice to have new ones come in to fill the gap.

Even if you’re not looking to start a specific, measurable, attainable, relevant, and time-based marketing plan now or have a tried-and-tested formula for gaining new donors, it doesn’t hurt to revisit the process of new donor acquisition and find opportunities for improvement or refinement.

Copyright josephvm (Pixabay)

How To Acquire New Donors for Your Nonprofit Organization

If you happen to be looking up articles on Google about new donor acquisition, you might be so surprised at the amount and diversity of content available that you begin wondering where to even start. Don’t worry: we felt the same way when we Googled the topic. We boiled down most of the tips you’ll find online on acquiring new donors to the following shortlist:

- Improve/Optimize Your Website – Most if not all of the time, your marketing is going to redirect prospective donors to your website, so it should be in the best state it could be with Call-To-Action (CTA) messaging and “Donate Now” buttons conspicuously and strategically placed. Your website should be user-friendly and accessible enough for prospects to explore and grow familiar with. Prime your website to convert visitors to email contacts by asking them to subscribe to newsletters or offering access to gated content.

- Write Blogs – After your mission and vision statements, your blog posts are your next best tools in elaborating the importance of your cause as well as relating and connecting with your audience. From sharing anecdotes and insights on what you do to homing in on the reasons why they should donate to expounding on the impact and influence of their actions, this is a space in your marketing that would serve you well when tapped into and maintained with regular posts.

- Social Media Content – Nearly everyone you know is on social media these days, so you won’t want to miss out on the opportunity to get on the radar of potential donors or supporters on popular platforms like Facebook, Instagram, LinkedIn, and X (formerly known as Twitter), then drive them to your social media page or website. You can even get creative in this space with surveys, online quizzes, and personality matches. Aside from reaching out to your target audience with customized messaging, social media networks open doors to connect with younger generations. They may not have the same wherewithal as their elders, but these young ones can be just as passionate about causes they believe in and support through likes, reacts, comments, shares, and follows, with the resulting engagement helping you widen your reach.

- Host In-Person or Virtual Events – Some potential donors or volunteers appreciate being able to put a face to an organization and no, this is not about just some headshot of a smiling representative. Instead, they’d like to have an actual person to interact and engage with, and this is an audience you can tap into with in-person or virtual events. Don’t limit yourself to just your events; attend public events that are not only high-profile or trending but also relevant to what your nonprofit represents.

- Paid Media Ads – For a low cost, you can build your email list based on a targeted audience from paid ads on Facebook and X/Twitter. You can also build one through Care2.com with paid sponsored petitions. LinkedIn and YouTube also offer paid ads targeting a specific demographic. Investing in an engaging YouTube ad also helps build brand awareness.

- Word of Mouth/Referrals – Don’t forget to tap into your existing donors or supporters for ideal referrals or requests for funds from their circle of family, friends, and acquaintances on your behalf. Your current and active supporters are your best advocates and can contribute to your mission of acquiring new donors just as well as any of your other marketing campaigns — and usually at little to no cost! Be sure to also capture that passionate support in the form of a testimonial published on your website or shared on your social media and other marketing venues.

- E-newsletters – You can’t expect your potential donors to visit your website or social media pages regularly to find out about the latest news about your nonprofit organization, but you can keep them up to date by sending them your periodic e-newsletter. Your e-newsletter is a powerful tool in not only keeping your prospects updated but also in nurturing and guiding the relationship you’ve formed across the time it’ll take until they make their first donation.

Copyright Mohamed_hassan (Pixabay)

Advanced Recommendations for Acquiring New Donors for Your Nonprofit Organization

Of course, it’s no surprise anymore if other similar nonprofits are already doing the aforementioned things to recruit new donors. One strategy in play is to reach out to people who have donated to similar nonprofit organizations. As you can see, you would need to take things one step further to either stand out from the competition or identify the ideal prospect before they even donate.

One way to do this is by soliciting referrals from your current donors or supporters with the “Booster” persona. In a brand equity tracking study we completed for United Way of King County, we identified the “Booster” persona as the donor type with the most positive feelings toward the nonprofit organization. They are affluent, mostly in the 35-to-54-year-old age range with children in the household, and are more likely to be employed full-time than other donor types. They are also reasonably well-educated and optimistic, with greater faith or general trust in charitable organizations; they tend to donate to United Way more than the average. This “Booster” is simply the best candidate to put in a good word for your nonprofit and potentially convert prospects from within their circles to your new donors.

Another way is to identify the “Benefactor” persona from potential donors from your email list. We conducted a donor profile report for The St. Vincent de Paul Society, and we found that while all donor households are generally affluent, there’s some evidence that Financial Donors tend to be more often found in households that are more “traditional,” if you will, than In-kind Donors. This “Benefactor” persona is more often married and typically has a male-led household, in database terms. Their length of residence is generally greater than that of In-kind Donors and it’s easier for us to confirm the homeownership status of a Financial Donor household. We can look up “Benefactor” personas using email lists for Facebook and LinkedIn and cross-check their statuses between these two social media platforms.

Copyright Vector_indo (Pixabay)

Not-so-affluent target donor groups

While the examples above are from affluent groups, you can’t discount the untapped possibilities other audiences represent. In the same report for The St. Vincent de Paul Society, we grouped the younger generation, households with children, and the middle and the working class into the “Great Middle,” which is a section of the larger community that isn’t as involved in philanthropic pursuits. We have recommended engaging the “Great Middle” by developing an outreach program to tell them about the good work of the nonprofit and inviting them to participate. We suggested communicating via social networking, blogs, email campaigns, e-newsletters, podcasts/webcasts, online forums/chatrooms, and traditional media.

Younger generations

The ability and desire of younger generations to become your donors should not be discounted. Constant Contact divides the younger generations into two main groups: Generation Z (born between 1996 and 2010) and Millennials (born between 1977 and 1995). In addition to social media influence, the younger ones can prove to be a valuable resource for volunteer efforts and getting more people their age to contribute to your cause. They’re also quite willing to donate, with 59% of Gen Z and 84% of millennials donating to charity. The younger generations are just as important as any other group of advocates, so be sure not to overlook them: connect and engage with them long-term via email and social media, along with casual, fun events.

Partnering with business

You might also consider partnering with businesses or corporations looking to demonstrate their corporate values. Seek out businesses with similar values to yours and explore how you can work together, whether it’s hosting events or fundraisers, volunteer opportunities, matching customer or employee donations, or even corporate grants. Such partnerships offer the business positive publicity and an improved culture of social responsibility while granting you exposure to new groups and resources that you wouldn’t otherwise have been able to find or access.

In the same vein, you might want to partner with similar nonprofit organizations. As we’ve mentioned earlier, one tactic being used is to reach out to people who have donated to similar nonprofits, so you might as well make it official with friendly organizations. You can host joint events, trade mailing or e-mail lists, or one can appeal to their donor base for the benefit of the other and vice versa. Who knows, but there might be some donors who are looking to donate to another similar nonprofit.

Copyright Liza Summer

Giving Tuesday

Don’t forget to take advantage of Giving Tuesday, which is the Tuesday after Thanksgiving. As this global event of giving grows more popular each year, you have one of the best opportunities to acquire new donors by promoting your organization’s cause and mission.

While there may be other times of the year when people are just as generous, these occasions may not be as strongly associated with donating as Giving Tuesday. Your organization can discover which of these other times are most advantageous to you by keeping track of peak donation periods throughout the year. Any data you’ve recorded and maintained from all the activities of your nonprofit organization help refine your new donor acquisition plans.
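As a minimal sketch of what tracking peak donation periods could look like, the Python snippet below totals donations by calendar month and ranks the months. The records, amounts, and function names are made up for illustration; a real analysis would read from your own donor database.

```python
from collections import defaultdict
from datetime import date

# Hypothetical donation records: (date received, amount in dollars).
donations = [
    (date(2023, 11, 28), 250.0),
    (date(2023, 11, 28), 75.0),
    (date(2023, 12, 20), 120.0),
    (date(2023, 6, 3), 40.0),
    (date(2023, 12, 31), 300.0),
]


def monthly_totals(records):
    """Sum donation amounts per calendar month (1-12)."""
    totals = defaultdict(float)
    for received, amount in records:
        totals[received.month] += amount
    return dict(totals)


def peak_months(records, top_n=2):
    """Return the top_n months ranked by total donations, highest first."""
    totals = monthly_totals(records)
    return sorted(totals, key=totals.get, reverse=True)[:top_n]


print(peak_months(donations))
```

With several years of records, the same grouping could be done by week or by campaign to see which occasions besides Giving Tuesday deserve a dedicated appeal.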

Those data can be converted into comprehensible and actionable insights by partnering with Cascade Strategies. Whether it’s audience segmentation studies, brand development studies, or even strategic consulting to find the best ways to focus on the needs and preferences of your best donors, Cascade Strategies can help you and your nonprofit organization craft the best plan to help you attract and retain new donors.

29

Jan

What is Nonprofit Marketing?

Effective marketing allows businesses to thrive and profit, but it is just as important for nonprofit organizations. You could even say that coming up with a strong marketing strategy is even more crucial for nonprofit organizations, given their limited resources and manpower.

With competent and dynamic nonprofit marketing, a nonprofit organization can promote its cause, ideals, and services; at the same time, it can raise funds or recurring donations as well as call the attention of would-be volunteers, sponsors, or supporters.

What are the Types of Nonprofit Marketing?

Nonprofit marketing campaigns come in a variety of types, including email, social media, video, website, content, digital, and even public speaking. Investopedia highlights the following three nonprofit marketing campaigns:

- Point-of-Sale Campaign – A donation is solicited during the checkout process for an online or in-person purchase a customer is making.

- Message-Focused Campaign (Event Marketing) – This type of strategy promotes a nonprofit organization by utilizing in-person or online events, most especially the trending or high-profile kind. Aside from focusing on the mission of the organization, they also use the opportunity to raise funds as well as encourage volunteerism for and support of their cause.

- Transactional Campaign (Partnership Marketing) – The nonprofit organization teams up with a for-profit business (or businesses). The corporate partner promotes the nonprofit organization’s cause by pledging to donate a portion of certain purchases towards the latter’s fundraising efforts. In some cases, the business utilizes social media wherein they pledge to donate to their nonprofit partner for every like or share of their promotional post. This campaign also allows the business to underscore its corporate values and in turn, generate positive publicity.

Copyright RDNE Stock Project

Advanced Forms of Nonprofit Marketing

Forbes.com has noted that nonprofit organizations function differently from traditional businesses so some online marketing strategies may not work well with nonprofits. Recognizing this disparity, online giants like Google and YouTube have come up with customized options to help with nonprofit marketing.

- Google Grants for AdWords – This program gives a nonprofit $10,000 per month in AdWords credit for advertising on Google. However, there are strict eligibility requirements, such as a current 501(c)(3) status for valid charities. There are also rules to follow to keep the grant: for example, your ads must be focused on sending people to your website, and displaying ads from Google AdSense is prohibited. The application and waiting process takes time, too. You can also visit the Google for Nonprofits page for more information on Google Apps for nonprofits.

- YouTube Nonprofit Program – Instead of money, this program provides additional features and benefits for your YouTube videos such as a donate button, live streaming, and call-to-action overlays (which send viewers to a website or specific webpage when clicked). Members of the program can also apply separately for production resources, which allow you to get production access to shoot or edit your video at YouTube’s Los Angeles studio. The eligibility requirements for the program are identical to Google Grants, but you need to already have a YouTube channel with some good videos uploaded before applying.

- Facebook Nonprofit Opportunities – While Facebook doesn’t offer a program for nonprofits, there are a lot of different apps you can add to your Facebook page to support your nonprofit cause. DonateApp and JustGiving are a couple of apps that can help add a “Donate Now” button to your Facebook page, allowing a supporter to donate at their convenience there instead of heading over to your website to do so.

Copyright Gustavo Fring

How to Create a Nonprofit Marketing Plan

- Set Marketing Goals – Are you looking to raise funds with your next campaign? Or maybe encourage more volunteers or supporters to help? Perhaps you want to drive more people to visit your website. You’ll need to know and understand first what you’re trying to achieve to determine the focus and direction of your marketing plan. Define your marketing goal with the SMART method– it should be specific, measurable, attainable, relevant, and time-based.

- Identify or segment your audiences – Once you have clear goals, you’ll then need to identify your target audience. You’ll want to optimize your nonprofit’s limited resources by customizing your marketing messaging to resonate with your intended audience. Or if you’re looking to target different groups, you’ll need to segment your audience into different personas so you’re able to adjust your campaign for each type.

- Define Your Marketing Message – With your goals and audience(s) set, you’ll now be able to craft the central messaging or theme of your campaign. This is especially important if you’re segmenting your audience- you’re customizing your messaging per persona but the central theme remains the same throughout. Your messaging must convey not only the importance of your cause but also why your audience should care about your efforts. It also must be inspiring and compelling enough to convince them to take action or at the very least, make a memorable impression.

- Choose Your Marketing Strategies – You now have the what, who, and why of your nonprofit marketing campaign; it’s now time to establish the how. Aside from email and social media, are you planning to do video or content marketing? Are there any businesses you can partner with maybe? Would an event work as well for this campaign? Depending on your target audience, your marketing plan may involve utilizing more than one platform for delivering your message.

- Analyze results and make adjustments – The goal and campaign type you’ve chosen come with clear key performance indicators to assess your progress. Sometimes you won’t get your intended results the first time, but you can use the data or insights you’ve gained to fine-tune or set baselines for your next campaigns. Just keep tracking and reviewing your data, making adjustments here and there or perhaps even dropping elements that don’t work.
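
To make the last step concrete, here is a small Python sketch that computes two common key performance indicators and compares a new campaign against a baseline. The campaign names, figures, and the choice of conversion rate and average gift as KPIs are assumptions for illustration, not a prescribed set.

```python
from dataclasses import dataclass


@dataclass
class CampaignResult:
    """Hypothetical summary of one campaign's outcome."""
    name: str
    visitors: int          # people who reached the donation page
    donations: int         # completed donations
    amount_raised: float   # total dollars raised


def conversion_rate(result):
    """Share of visitors who donated (0.0 when there were no visitors)."""
    return result.donations / result.visitors if result.visitors else 0.0


def average_gift(result):
    """Average dollars per donation (0.0 when there were no donations)."""
    return result.amount_raised / result.donations if result.donations else 0.0


# Made-up figures: the first campaign serves as the baseline for the second.
baseline = CampaignResult("spring email", visitors=2000, donations=40, amount_raised=1800.0)
follow_up = CampaignResult("fall email", visitors=2500, donations=75, amount_raised=3000.0)

for campaign in (baseline, follow_up):
    print(campaign.name, conversion_rate(campaign), average_gift(campaign))
```

In this invented example the follow-up converts more visitors but at a smaller average gift, which is the kind of trade-off worth reviewing before the next campaign.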

Copyright RDNE Stock Project

Cascade Strategies can help you and your nonprofit organization create and develop your marketing plan. With over three decades of market research experience and top-of-the-line services that include Segmentation Studies and Qualitative Research, let us assist you in heightening awareness about your cause as well as finding the ideal volunteers, sponsors, and donors to support your ideals.

01

Nov

What Is Psychographic Segmentation?

So you’ve completed your research on the demographics of your online perfume store and you’ve seen that women in their twenties in Seattle were your top buyers. That’s great, you thought as your mind started to work on the outlines of your next campaign targeted towards these women. However, you discovered upon going through the data one more time that your perfumes are just as popular with forty-something-year-old women in Las Vegas. And when you went to double-check again you discovered another group of women around 25 years old being ardent supporters of your perfumes, but this time they’re from New York.

Now how do you go about your marketing given that you would need to adjust it to target your top demographic? Sure, you’ve identified your best patrons as women between 20 and 50 years old but aside from the different locations, you’re not quite sure now what else sets them apart, which could poke holes in your messaging and cause it to fail to resonate with a number of them.

This exercise shows you the limitations of demographic-based marketing. Demographics answer the question “Who are your buyers?” but in order for your efforts to become more effective, you need to go deeper by answering “Why are they buying?” And this is where psychographic segmentation comes in.

Psychographic segmentation is the process of grouping consumers according to their motivations, goals, attitudes, opinions, beliefs, and other psychological factors. It helps you better understand what drives purchase decisions. Not only does psychographic segmentation allow you to diversify your marketing and reach out to different groups of consumers, it also allows you to create or customize products or services to cater to the varying needs of your buyers.

Copyright Elf-Moondance (Pixabay)

Why is Psychographic Segmentation Important in Brand Building?

Going back to the earlier scenario, you decided to reach out to your target demographic through an online survey, explaining it would help you understand them and serve their needs better. Based on the responses you received, you discovered that these women between 20 and 50 years old from different states appreciated the sweet-smelling but unique line of perfumes you’ve been selling at cost-effective pricing with efficient delivery times. Thus, the messaging of your next campaign highlighted the popularity of your sweet-scented perfumes, competitive pricing, and quick delivery. And the next time the opportunity presented itself, you even went as far as offering free delivery for a limited time.

Because you’ve used psychographic segmentation to break your market into different groups, you’ve also become aware of your other customer segments, which opened up marketing strategies you could leverage towards these subsets. Let’s say one of these groups was composed of regular clients who — although they didn’t buy as much as the earlier group we’ve discussed — you discovered frequently bought a certain perfume. Upon further research, surveys, and interviews of some of the members of this segment, you found that you’re the only online perfume shop that carried this fragrance. This then allowed you to branch out with new marketing which put a spotlight on the fact that this hard-to-find scent could only be bought at your online store, tapping into more potential customers falling under this segment. This also opened up more research on what fragrances your competitors didn’t offer but which your brand carried as well as the development of new unique perfumes that one wouldn’t find anywhere else online.

Psychographic segmentation not only gave you an understanding of the “why” behind purchases, it also granted you actionable insights on selling more of your products. With this data-driven approach, your brand is able to create different marketing playbooks for your various customer segments. The buyer’s journey would be different per customer, but in their minds there is only one brand that’s on top when it comes to a selection of unique scents at great prices and fast turnaround time for delivery.
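
The perfume-store scenario can be sketched in a few lines of Python. The survey responses, field names, and reason labels below are invented for illustration; the point is only that grouping by the stated reason for buying produces segments that cut across age and city.

```python
from collections import defaultdict

# Hypothetical survey responses from the online perfume store scenario.
responses = [
    {"customer": "A", "age": 24, "city": "Seattle", "main_reason": "price and fast delivery"},
    {"customer": "B", "age": 43, "city": "Las Vegas", "main_reason": "price and fast delivery"},
    {"customer": "C", "age": 25, "city": "New York", "main_reason": "price and fast delivery"},
    {"customer": "D", "age": 31, "city": "Seattle", "main_reason": "hard-to-find scent"},
    {"customer": "E", "age": 47, "city": "New York", "main_reason": "hard-to-find scent"},
]


def segment_by_reason(survey):
    """Group customers by their stated reason for buying (the 'why')."""
    segments = defaultdict(list)
    for response in survey:
        segments[response["main_reason"]].append(response["customer"])
    return dict(segments)


segments = segment_by_reason(responses)
for reason, customers in segments.items():
    print(reason, customers)
```

A real segmentation study would use many more questions and statistical clustering rather than a single survey field, so treat this as a toy model of the idea.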

Copyright Mohamed_hassan (Pixabay)

What Are Psychographic Segmentation Variables?

So how do you group your market according to your psychographic segmentation data? While there are several types of psychographic data on which you could base the customer segments you’ll be forming, indeed.com listed the following as the five main psychographic segmentation variables:

1. Personality – This variable refers to the beliefs, motivations, behaviors, and overall outlook of your target audience. You can group your customers based on personality traits like creativity, sociability, optimism, empathy, etc.

2. Lifestyle – This variable focuses on the daily habits and preferences of a customer, including how they spend their time and things they consider important.

3. Social class – This variable assumes preferences based on income level and spending power. It can also influence how a product is priced or whether it should be marketed as a luxury.

4. Attitudes – This variable considers the behavior of a customer based on their background and values. An example would be an animal lover who leans towards perfume brands that are known to be cruelty-free, meaning they don’t test their products on animals.

5. AIO (Activities, Interests and Opinions) – This variable groups consumers based on what they similarly enjoy or are passionate about. The second scenario earlier where you discovered the subset of regular customers purchasing the hard-to-find fragrance is an example of this variable.

Copyright geralt (Pixabay)

Personas vs. Psychographic Segmentation

While it might be easy to confuse psychographic segmentation with personas, these two concepts are subtly different. Psychographic segmentation groups your markets according to similar psychological traits and can therefore present a whole-market picture of consumers, spanning the range from those who passionately love your market offering to those who dislike it or resist it. This whole-market look also gives you the ability to attach real numbers to the data, enabling you to do things like demand forecasting, market sizing, receptivity studies based on counts of prospects, and the like.

Personas, on the other hand, are profiles — portraits of individual persons. They are more specific, detailed, and focused. Think of a police profile of a crime suspect (just the format of it, not the content.) A well-drawn persona presents a fictionalized representation of your ideal buyer, with information about key traits of that person. You might describe these traits by saying something like “likes to splurge on expensive vacations,” or “typically employed in middle-echelon white-collar jobs like administrative staff, etc.” The persona provides a vivid description of that individual, so you can better understand how to appeal to that kind of person with marketing campaigns and other forms of brand outreach. A good persona description humanizes the data and gives it a relatable face.

Please click here to find out more about segmentation studies, including some interesting case histories. Cascade Strategies has for over three decades been assisting top US and international companies with high quality market research and superior thinking in identifying and focusing on their most profit-optimal consumers. If you would like to find out more, or learn how Cascade Strategies can help provide brand development research for your specific marketing needs, feel free to contact us here.

19

Sep

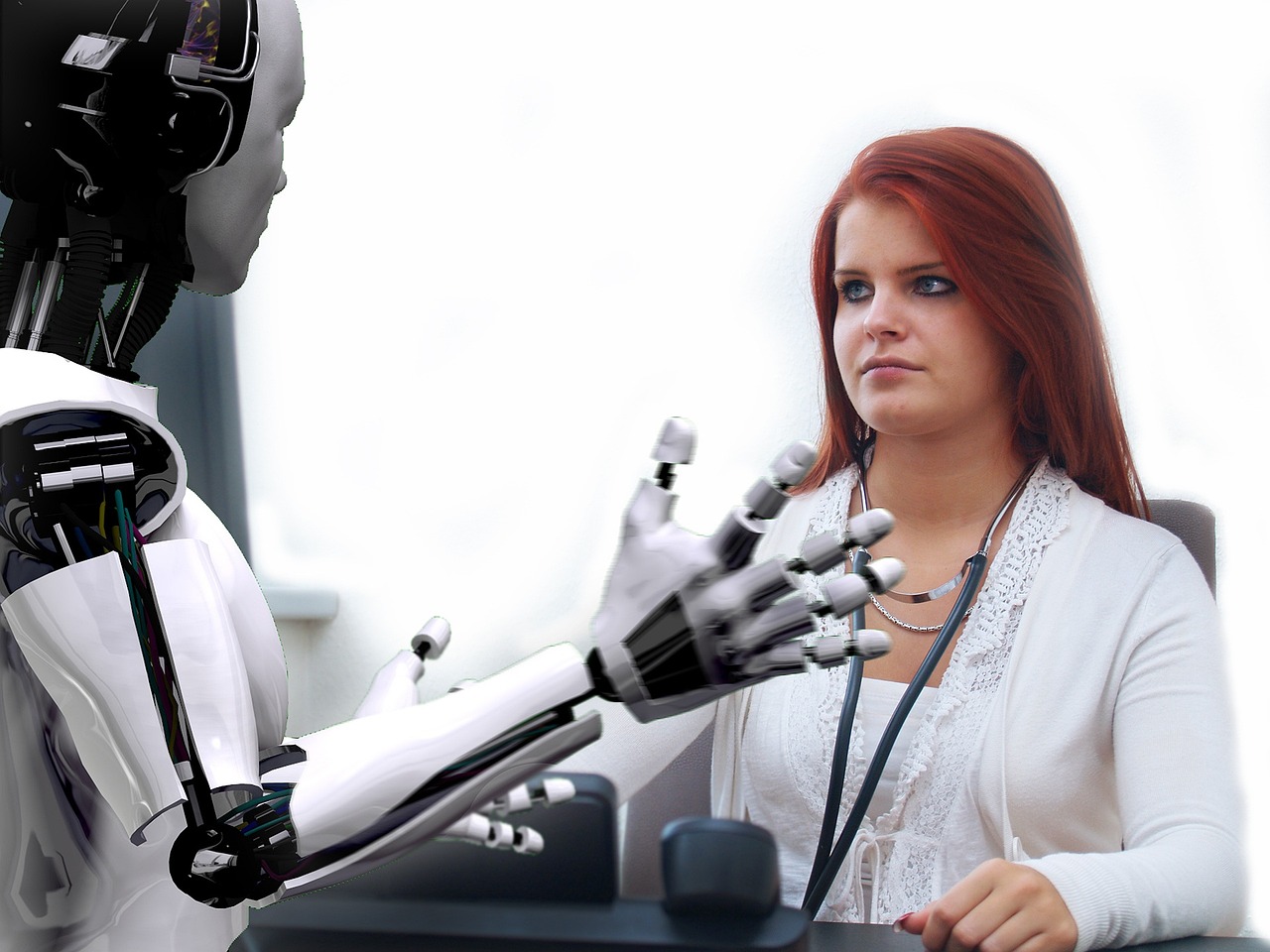

Longstanding Concerns Over AI

From an open letter endorsed by tech leaders like Elon Musk and Steve Wozniak which proposed a six-month pause on AI development to Henry Kissinger co-writing a book on the pitfalls of unchecked, self-learning machines, it may come as no surprise that AI’s mainstream rise comes with its own share of caution and warnings. But these worries didn’t pop up with the sudden popularity of AI apps like ChatGPT; rather, concerns over AI’s influence have existed for decades, expressed even by one of its early researchers, Joseph Weizenbaum.

ELIZA

In his book Computer Power and Human Reason: From Judgment to Calculation (1976), Weizenbaum recounted how he gradually transitioned from exalting the advancement of computer technology to a cautionary, philosophical outlook on machines imitating human behavior. As encapsulated in a 1996 review of his book by Amy Stout, Weizenbaum created a natural-language processing system he called ELIZA which is capable of conversing in a human-like fashion. When ELIZA began to be considered by psychiatrists for human therapy and his own secretary interacted with it too personally for Weizenbaum’s comfort, it led him to start pondering philosophically on what would be lost when aspects of humanity are compromised for production and efficiency.

Copyright chenspec (Pixabay)

The Importance of Human Intelligence

Weizenbaum posits that human intelligence can’t be simply measured, nor can it be restricted by rationality. Human intelligence isn’t just scientific; it is also artistic and creative. On what a monopoly of the scientific approach would mean, he remarked, “We can count, but we are rapidly forgetting how to say what is worth counting and why.”

Weizenbaum’s ambivalence towards computer technology is further supported by the distinction he made between deciding and choosing: a computer can make decisions based on its calculation and programming, but it cannot ultimately choose, since that requires judgment which is capable of factoring in emotions, values, and experience. Choice fundamentally is a human quality. Thus, we shouldn’t leave the most important decisions to be made for us by machines but rather resolve matters from a perspective of choice and human understanding.

AI and Human Intelligence in Market Research

In the field of market research, AI is being utilized to analyze a multitude of data to produce accurate and actionable results or insights. One such example is deep learning models which, as Health IT Analytics explains, filter data through a cascade of multiple layers. Each successive layer refines the result by using or “learning” from the output of the previous one. This means that the more data deep learning models process, the more accurate their results tend to become, thanks to the continuing refinement of their ability to correlate and connect information.
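
To illustrate the “cascade of layers” idea, the short Python sketch below passes an input through two layers, each one working on the output of the layer before it. The weights are hand-picked for illustration and nothing is trained here; a real deep learning model would learn its weights from data using a dedicated library.

```python
def relu(values):
    """Keep positive values and replace negatives with zero."""
    return [max(0.0, v) for v in values]


def dense(inputs, weights, biases):
    """One layer: a weighted sum of the inputs per output unit, plus a bias."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


def forward(inputs, layers):
    """Feed the data through each layer in turn (the 'cascade')."""
    activation = inputs
    for weights, biases in layers:
        # Each layer takes the previous layer's output as its input.
        activation = relu(dense(activation, weights, biases))
    return activation


# Hypothetical, hand-picked weights: two inputs -> two hidden units -> one output.
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),
    ([[1.0, 1.0]], [-1.0]),
]

print(forward([2.0, 1.0], layers))
```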

While you can depend on the accuracy of AI-generated results, Cascade Strategies takes it one step further by applying a high level of human thinking. This allows Cascade Strategies to interpret and unravel insights a machine would’ve otherwise missed because it can only decide, not choose.

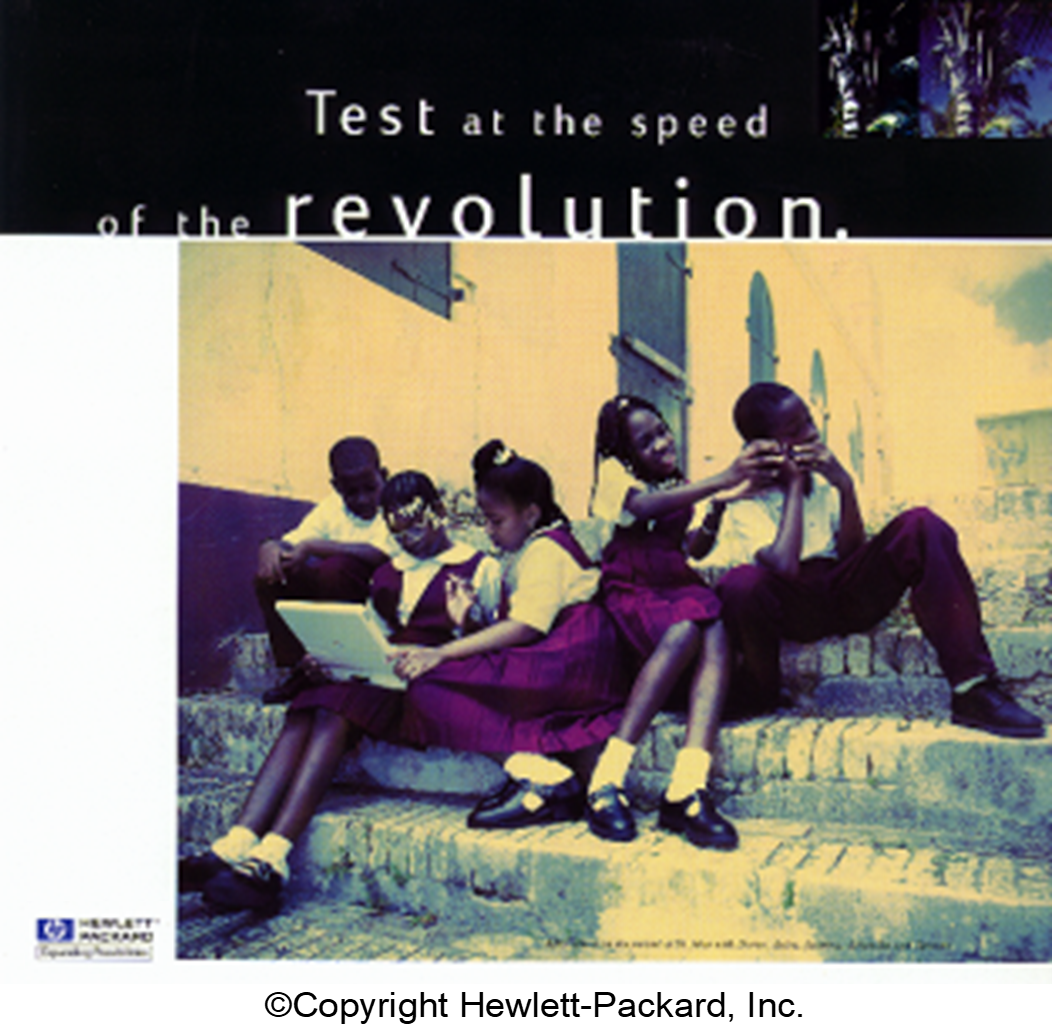

Take a look at the market research project we performed for HP to help create a new marketing campaign. As part of our efforts, we chose to employ very perceptive researchers to spend time with worldwide HP engineers as well as engineers from other companies.

This resulted in our researchers discovering that HP engineers showed greater qualities of “mentorship” than other engineers. Yes, conducting their own technical work was important but just as significant for them was the opportunity to impart to others, especially younger people, what they were doing and why what they were doing was important. This deeper level of understanding led the way for a different approach to expressing the meaning of the HP brand for people and ultimately resulted in the award-winning and profitable “Mentor” campaign.

If you’re tired of the hype about AI-generated market research results and would like more thoughtful and original solutions for your brand, choose the high level of intuitive, interpretive, and synthesis-building thinking Cascade Strategies brings to the table. Please visit https://cascadestrategies.com/ to learn more about Cascade Strategies and more examples of our better thinking for clients.

24

Aug

The Future Is Here

“Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.”

With just 22 words, we are ushered into a future once heralded in science fiction movies and literature of the past, a future our collective consciousness anticipated but has now taken us by surprise upon the realization of our unreadiness. It is a future where machines are intelligent enough to replicate a growing number of significant and specialized tasks. A future where machines are intelligent enough to not only threaten to replace the human workforce but humanity itself.

Published by the San Francisco-based Center for AI Safety, this 22-word statement was co-signed by leading tech figures such as Google DeepMind CEO Demis Hassabis and OpenAI CEO Sam Altman. Both have also expressed calls for caution before, joining the ranks of other tech specialists and executives like Elon Musk and Steve Wozniak.

Earlier in the year, Musk, Wozniak, and other tech leaders and experts endorsed an open letter proposing a six-month halt on AI research and development. The suggested pause is presumed to allow for time to determine and implement AI safety standards and protocols.

Max Tegmark, physicist and AI researcher at the Massachusetts Institute of Technology and co-founder of the Future of Life Institute, once held an optimistic view of the possibilities granted by AI but has now recently issued a warning. He remarked that humanity is failing this new technology’s challenge by rushing into the development and release of AI systems that aren’t fully understood or completely regulated.

Henry Kissinger himself co-wrote a book on the topic. In The Age of AI, Kissinger warned us about AI eventually becoming capable of reaching conclusions and making decisions no human is able to consider or understand. This notion becomes more unsettling when considered in the context of everyday life and warfare.

Working With AI

We at Cascade Strategies wholeheartedly agree with this emerging consensus, and we believe that we’ve been diligent in upholding the responsible and conscientious use of AI. Not only have we long been advocating for the “Appropriate Use” of AI, but we’ve also made it a hallmark of how we find solutions for our clients’ needs with market research and brand management.

Just consider the work we’ve done with the Expedia Group. For years, they’ve utilized a segmentation model to engage with their lodging partners by offering advice that could lead to the partner winning a booking over a competitor. AI filters through the thousands of possible recommendations to arrive at a shortlist of the best selections optimized for revenue.

With the continued growth and diversification of their partners, they then needed a more effective approach to engaging and appealing to them, something that focuses more on each partner’s behavior and motivations. We came up with two things for Expedia: a psychographic segmentation formed into subgroups based on patterns of thinking, feeling, and perceiving to explain and predict behavior, and more importantly, a Scenario Analyzer that utilizes the underlying AI model but now delivers recommendations in action-oriented and compelling messaging tailored to that specific partner.

The best part about the Scenario Analyzer is that whether the partner follows any of the recommended advice or does nothing, Expedia still stands to make a profit while maintaining an image of personalized attentiveness to their partner’s needs. And ultimately, it’s the partner who gets to decide, not the AI.

Copyright Tara Winstead

Our Future With AI

This is how we view and approach AI: it’s not the be-all and end-all solution but rather an essential tool for increasing productivity and efficiency in tandem with excellent human thinking, judgment, and creativity. Yes, it is going to be part of our future, but in line with the new consensus, we believe that AI shaped by human values and experience is the way to go with this emerging and exciting technology.

Read more

17

Aug

What Is Remediation?

The Cambridge Dictionary defines remediation as “the process of improving or correcting a situation.” Remediation programs are commonly employed in teaching and education, where they address learning gaps by reteaching basic skills with a focus on core areas like reading and math. And as pointed out in an understood.org article, remedial programs are expanding in many places in our post-COVID-19 world.

In healthcare, there’s a wide range of remediation programs, or “remedial care,” diversified based on their end goal which may include smoking cessation, anti-obesity, weight reduction, diet improvement, exercise, heart-healthy living, alcoholism treatment, drug treatment, and more. But how do you identify the people who need remedial care the most?

Who Needs Remediation?

You might say you can tell who needs remedial care just by looking at the physical appearance of the prospective patient, but this is a shortsighted answer to the question. What about those who need remedial care for a heart-healthy lifestyle? Surely you can’t spot a likely candidate for this remediation program at a glance.

It goes deeper than that. What if you, a healthcare representative, could only devote remedial care to a select few individuals given limited resources and time, but you want to make sure that the whole remediation program is successful by achieving its intended goals? Just imagine all that time, effort, and resources spent only for the patient to relapse into their old ways not long after program completion, or even in the middle of the remediation process itself.

Deep Learning and Remediation

This is where deep learning comes in. Deep learning, also known as hierarchical learning or deep structured learning, is defined by Health IT Analytics as a type of machine learning that uses a layered algorithmic architecture to analyze data. In deep learning models, data is filtered through a cascade of multiple layers, with each successive layer using the output from the previous one to inform its results. Deep learning models can become more and more accurate as they process more data, essentially learning from previous results to refine their ability to make correlations and connections.
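For readers who like to see the idea in code, the "cascade of layers" described above can be sketched in a few lines of Python. This is only a minimal illustration, not the model used in any real healthcare program: the function names, the ReLU activation, and the weights are all assumptions made up for this example, and a real model would learn its weights from data rather than have them typed in by hand.

```python
def dense_layer(inputs, weights, biases):
    """One layer: a weighted sum of the inputs plus a bias for each output,
    passed through a ReLU (negative values become 0.0)."""
    return [
        max(0.0, sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def forward(inputs, layers):
    """Feed the data through each layer in turn; every layer receives
    the output of the previous one as its input."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations


# Hypothetical, hand-picked weights for illustration only.
example_layers = [
    ([[0.5, 0.25], [-1.0, 0.25]], [0.0, 0.0]),  # layer 1: 2 inputs -> 2 outputs
    ([[2.0, 3.0]], [0.5]),                      # layer 2: 2 inputs -> 1 output
]

score = forward([1.0, 2.0], example_layers)
print(score)
```

The point of the sketch is simply the loop in `forward`: the output of one layer becomes the input of the next, which is what "layered" means in this context.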

Deep learning models handle and process huge volumes of complex data through multi-layered analytics to provide fast, accurate, and actionable results or insights. When applied to the scenario described above, deep learning filters through that multitude of patient data and prioritizes those who need remedial care the most.

You can also align its findings to identify individuals who will not only return monetary value to your healthcare brand but are also the most likely to “engage” or participate in programs offered by your company, such as wellness, diet, fitness, or exercise. They can also be the people most likely to commit to avoiding poor lifestyle choices, such as overeating, smoking, and excessive drinking, helping ensure the success of the remediation program.

With a combination of three decades of market research experience and conscientious use of AI, Cascade Strategies has been helping healthcare organizations develop advanced models to handle, filter and identify the likeliest of candidates for their program purposes. Cascade Strategies helps industry professionals not only recognize their ideal customers but also reach out to them with some of the most effective and award-winning marketing campaigns, thanks to our array of services such as Brand Development Research and Segmentation Studies. To see more examples of how we help leading worldwide companies achieve their goals, please visit our website.

Here are some of our suggestions for further reading on deep learning and healthcare:

https://builtin.com/artificial-intelligence/machine-learning-healthcare

https://research.aimultiple.com/deep-learning-in-healthcare/

https://healthitanalytics.com/features/types-of-deep-learning-their-uses-in-healthcare

Read more

10

Aug

The Impact of AI

In The Age of AI, which Henry Kissinger co-wrote with Eric Schmidt and Daniel Huttenlocher, Kissinger tried to warn us that AI would eventually have the capability to come up with conclusions or decisions that no human is able to consider or understand. Put another way, self-learning AI would become capable of making decisions beyond what humans programmed into it and base such conclusions on what it deems the most logical approach, regardless of how negative or devastating the consequences can be.

A common example to illustrate this point is how AI has already transformed games of strategy like chess. Given the chance to learn the game for itself instead of using plays programmed into it by the best human chess masters, it executed moves that had never crossed the human mind. And when playing against other computers that were limited by human-based strategies, the self-learning AI proved dominant.

When applied to the field of warfare, this could possibly mean AI proposing or even executing the most inhumane of plans regardless of human disagreement simply because it considers such a decision the most logical step to take.

The Influence of AI

As part of Kissinger’s warning, it’s been noted just how far-reaching AI’s influence already is in modern life, especially with its usage in seemingly innocuous things such as social media algorithms, grammar checkers, and the much-hyped ChatGPT. With the growing dependency on AI, there is a risk of human thinking being eclipsed by machine-based efficiency and effectiveness. And how AI arrives at such efficient and effective decisions becomes questionable, because it could become difficult or nearly impossible to trace what it has learned along the way.

Just imagine someone making a decision influenced by information fed to them by AI, yet unable to explain the reasoning behind that decision. That person may not realize it, but at that point they’re living in an AI world, where human decision-making imitates machine decision-making rather than the reverse. It was this interchangeability Alan Turing was referring to with his famous postulate about artificial intelligence, the so-called “Turing Test,” which holds that you haven’t reached anything that can fairly be called AI until you can’t tell the difference.

Copyright Pavel Danilyuk

Appropriate Use of AI

However, it’s been pointed out that the book doesn’t subscribe to “AI fatalism,” the common belief that AI is inevitable and that humans are powerless to shape it. The authors wrote that we are still capable of controlling and shaping AI with our human values, the “appropriate use” that we at Cascade Strategies have been advocating for quite some time. We have the opportunity to limit or restrain what AI learns or align its decision-making with human values.

Kissinger had sounded the warning while others had already made calls to start limiting AI’s capabilities. We are hopeful that in the coming years, with the best modern thinkers and tech experts at the forefront, we progress to more of an AI-assisted world where human agency remains paramount instead of an AI-dominated world where inscrutable decisions are left up to the machines.

Read more

So you’ve completed your research on the demographics of your online perfume store and you’ve seen that women in their twenties in Seattle were your top buyers. That’s great, you thought as your mind started to work on the outlines of your next campaign targeted towards these women. However, you discovered upon going through the data one more time that your perfumes are just as popular with forty-something-year-old women in Las Vegas. And when you went to double-check again you discovered another group of women around 25 years old being ardent supporters of your perfumes, but this time they’re from New York.