Apr

AI’s Impact On Critical Thinking and Learning – What Studies Are Saying So Far

jerry9789 0 comments artificial intelligence, Burning Questions

Generative AI and Critical Thinking

In our last blog post, we touched on two studies suggesting that Generative AI is making us dumber. One of those studies, published in the journal Societies, set out to look deeper into GenAI’s impact on critical thinking by surveying and interviewing over 600 UK participants of varying age groups and academic backgrounds. The study found “a significant negative correlation between frequent AI tool usage and critical thinking abilities, mediated by increased cognitive offloading.”

Cognitive offloading refers to the use of external tools and processes to simplify tasks or optimize productivity. It has always raised concerns over the perceived decline of certain skills; in this instance, the dulling of one’s critical thinking. In fact, the study found that cognitive offloading was more pronounced among younger participants, who demonstrated higher reliance on AI tools and scored lower on critical thinking.

Conversely, participants with higher educational backgrounds showed better command of their critical thinking no matter the degree of AI usage, putting more confidence in their own mental acuity than the AI-based outputs. Aligning with our advocacy for the “appropriate use of AI,” the study emphasizes the importance and appreciation of high-level human thinking over thoughtless and unmitigated adoption of AI technology.

Copyright: jambulboy

Generative AI and Learning

In truth, a number of earlier studies have revealed that the arbitrary adoption of AI tools can be detrimental to one’s ability to learn or develop new skills. A 2024 Wharton study on the impact of OpenAI’s GPT-4 demonstrated that unmitigated deployment of GenAI fostered overreliance on the technology as a “crutch” and led to poor performance when such tools were taken away. The field experiment involved 1,000 high school math students who, following a math lesson, were asked to work through a practice test. They were divided into three groups, with two of these groups having access to ChatGPT while the third had only their class notes. One group of students with ChatGPT performed 48 percent better than those without; however, on a follow-up exam taken without the aid of any laptop or books, the same students scored 17 percent worse than their peers who had only their notes.

What about the second group with the GenAI tutor? They not only performed 127 percent better than the group without ChatGPT access on the first exam, but they also scored close to that group on the follow-up exam. The difference? At some point in their interactions, students in the first ChatGPT group would prompt the AI to divulge the answers, resulting in an increased reliance on GenAI to provide the solutions instead of making use of their own problem-solving abilities. The second group’s AI tutor, on the other hand, was customized to behave more like a highly effective real-world tutor: it would help by giving hints and providing feedback on the learner’s performance, but it would never directly give the answer.

Similar tests with a GenAI tutor in 2023 studied the same issue of AI dependence and the value of careful deployment of AI tools. Khanmigo, a GenAI tutor developed by Khan Academy, was voluntarily tested by Newark elementary school teachers, who belong to the largest public school system in New Jersey. They came back with mixed results, with some complaining that the AI tutor gave away answers, even incorrect ones in some cases, while others appreciated the bot’s usefulness as a “co-teacher.”

Other studies regarding the effectiveness of AI tutors have shown increases in learning and student engagement. These studies have also shown that GenAI can help reduce the time it takes to get through learning materials compared to traditional methods. One study that extolled the benefits of GenAI tutors involved Harvard undergraduates learning physics in 2024, and similar to the tutor group in the Wharton research, the AI was prevented from directly providing the answer to students. It would guide the student through the learning process one step at a time, providing incremental updates on the student’s progress, but never outright telling them the answer. There are merits to the idea of Generative AI as a teaching assistant, but it serves students better when it is positioned to engage one’s attention and abilities rather than induce dependence on it to generate the answers.

Copyright: Only-shot

Can We Use GenAI Without Making Us Dumber?

These studies shed light on how we should approach AI solutions and development, whether the end product is being deployed in learning, productivity or other relevant applications. Beyond thoughtful planning for how AI tools will be deployed, there should be a focus on engaging the human faculties involved, with safeguards that empower people throughout the entire process instead of letting the machine take over wholesale. AI technology is developing rapidly, but we can keep pace and remain reasonable as long as human engagement and empowerment are kept at the core of its utilization and adoption.

Amid contemporary fears that anyone could be replaced anytime by AI, these studies highlight how vital and interconnected the human factor is to the effective deployment and development of AI tools. One could be content with the constant and consistent output AI tools generate, but progress is only possible when competent human minds are involved in the process and direction. Students can easily find answers with AI tools at their disposal, but why not advance their understanding of how solutions are formed with engaging and relatable AI-powered educational experiences? High-level human thinking grounded in values and experience can’t be replicated by machines, and perhaps there’s no better time than now to incorporate it into the heart of the AI revolution.

While AI developers hope that optimization and automation will free the human mind to go after bigger and more creative pursuits, we here at Cascade Strategies simply hope that humanity emerges from all of these advancements as more, not less, than what it was when we entered the AI revolution.

Additional Reading:

Why AI is no substitute for human teachers – Megan Morrone, Axios

AI Tutors Can Work—With the Right Guardrails – Daniel Leonard, Edutopia

Featured Image Copyright: jallen_RTR

Top Image Copyright: danymena88

Mar

Are We Getting Dumber Because of AI?

jerry9789 0 comments artificial intelligence, Burning Questions

Is Generative AI making us dumber? Two recent studies suggest so.

A study published early this year titled “AI Tools in Society: Impacts on Cognitive Offloading and the Future of Critical Thinking” showed that growing dependence on AI could lead to a decline in critical thinking. Authored by Michael Gerlich of the SBS Swiss Business School, the study was based on surveys and interviews of 666 UK participants from different age groups and academic backgrounds. The problem was more pronounced among younger participants, who demonstrated increased reliance on AI to perform routine tasks and scored lower on critical thinking than their older counterparts.

More recently, a study by Microsoft and Carnegie Mellon University reported similar findings: the more workers depended on AI for their work, the duller their critical thinking became. The study surveyed 319 knowledge workers who used generative AI at least once a week and examined how and when they applied AI or their own critical thinking skills when performing tasks. The more faith participants put in genAI to produce an acceptable outcome, the less they used their critical thinking skills. On the other hand, participants who had more confidence in their own abilities than in AI’s were found to exercise their critical thinking more, out of concern over unintended and overlooked machine output.

Copyright: Tara Winstead

What is Cognitive Offloading?

Both studies link overreliance on AI with cognitive offloading, which is when someone uses external tools or processes to complete tasks, resulting in reduced engagement with deep, reflective thinking. Yes, AI improves efficiency and saves time and money, but these studies suggest that it could make humans less smart over time.

However, cognitive offloading isn’t new; it has existed in a variety of forms throughout time, such as using a calculator instead of performing mental mathematics or simply making a grocery list instead of memorizing all the items you need to buy. It’s no surprise then that there are questions about the merits of the studies, such as self-reporting bias or how critical thinking was measured. Forbes suggests that AI isn’t making us dumb but lazy, while another commentary emphasizes that in order for there to be harm to one’s critical thinking abilities, one must have critical thinking to begin with.

Copyright: Pavel Danilyuk

Rethinking AI Development

Nevertheless, these studies contribute to the conversation regarding the direction of genAI development, now with the nuance of being mindful and respectful of its human users’ intelligence and faculties. Recommendations include rethinking AI designs and processes so that they incorporate and engage human critical thinking. These studies are helping bring the focus back to AI serving as a tool that augments human capabilities instead of overtaking them.

For us at Cascade Strategies, we’re glad that these studies have renewed awareness and appreciation of human intelligence and creativity. Our world could’ve easily devolved into settling for more of the same output, so it pleases us to learn that more voices are becoming advocates and proponents not only of the “appropriate use of AI” but also of high-level human thinking.

Featured Image Copyright: Alex Knight

Top Image Copyright: Tara Winstead

Sep

What It Means to Choose or Decide In The Age of AI

jerry9789 0 comments artificial intelligence, Burning Questions

Longstanding Concerns Over AI

From an open letter endorsed by tech leaders like Elon Musk and Steve Wozniak which proposed a six-month pause on AI development to Henry Kissinger co-writing a book on the pitfalls of unchecked, self-learning machines, it may come as no surprise that AI’s mainstream rise comes with its own share of caution and warnings. But these worries didn’t pop up with the sudden popularity of AI apps like ChatGPT; rather, concerns over AI’s influence have existed for decades, expressed even by one of its early researchers, Joseph Weizenbaum.

ELIZA

In his book Computer Power and Human Reason: From Judgment to Calculation (1976), Weizenbaum recounted how he gradually transitioned from exalting the advancement of computer technology to a cautionary, philosophical outlook on machines imitating human behavior. As encapsulated in a 1996 review of his book by Amy Stout, Weizenbaum created a natural-language processing system he called ELIZA, which was capable of conversing in a human-like fashion. When psychiatrists began to consider ELIZA for human therapy and his own secretary interacted with it too personally for his comfort, Weizenbaum started pondering philosophically what would be lost when aspects of humanity are compromised for production and efficiency.

Copyright chenspec (Pixabay)

The Importance of Human Intelligence

Weizenbaum posits that human intelligence can’t be simply measured, nor can it be restricted by rationality. Human intelligence isn’t just scientific; it is also artistic and creative. On what a monopoly of the scientific approach would stand for, he remarked, “We can count, but we are rapidly forgetting how to say what is worth counting and why.”

Weizenbaum’s ambivalence towards computer technology is further supported by the distinction he made between deciding and choosing: a computer can make decisions based on its calculation and programming, but it cannot ultimately choose, since that requires judgment capable of factoring in emotions, values, and experience. Choice is fundamentally a human quality. Thus, we shouldn’t leave the most important decisions to be made for us by machines but should rather resolve matters from a perspective of choice and human understanding.

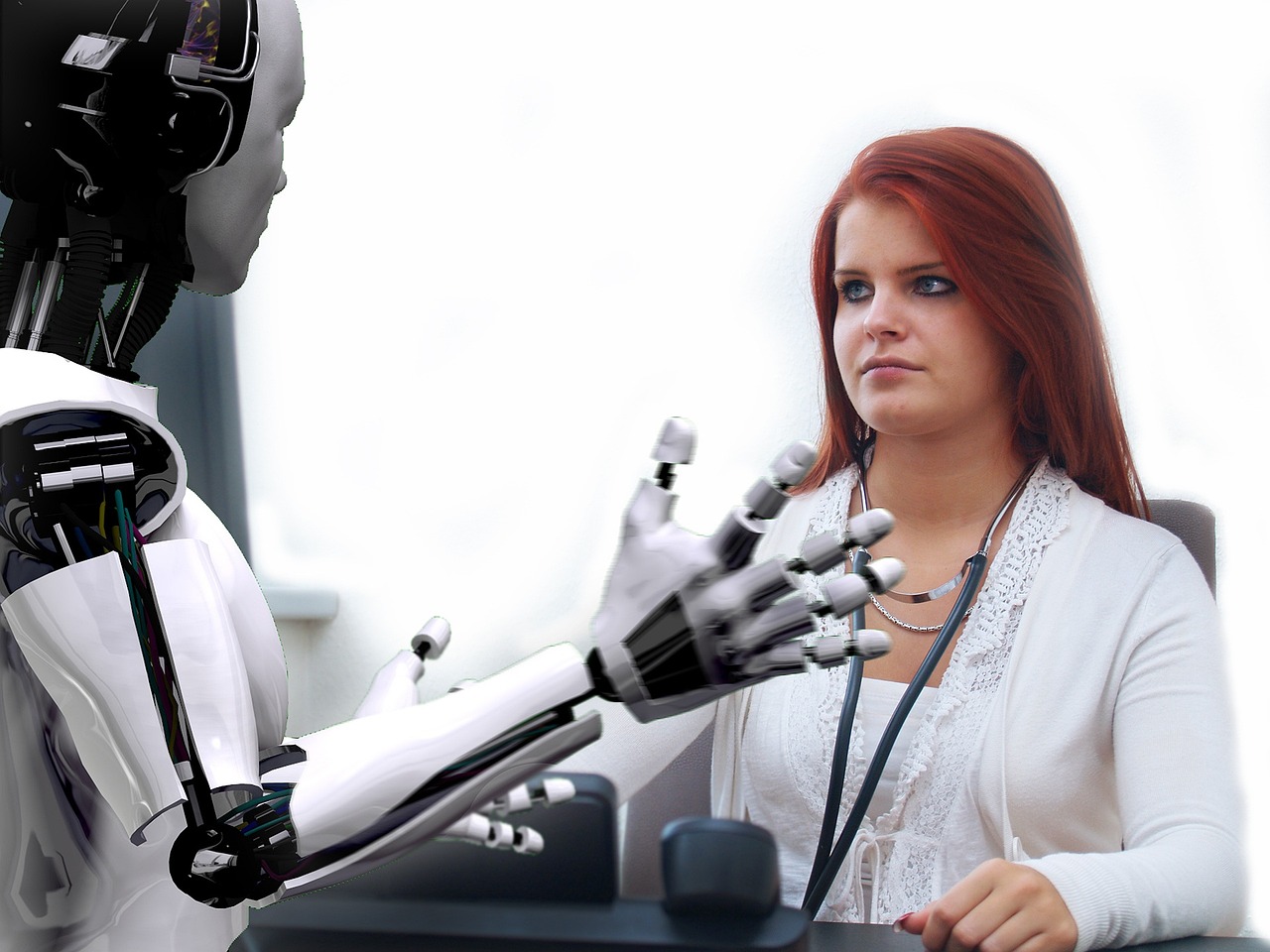

AI and Human Intelligence in Market Research

In the field of market research, AI is being utilized to analyze a multitude of data to produce accurate and actionable results or insights. One such example is deep learning models which, as Health IT Analytics explains, filter data through a cascade of multiple layers. Each successive layer improves its result by using or “learning” from the output of the previous one. This means the more data deep learning models process, the more accurate the results they provide thanks to the continuing refinement of their ability to correlate and connect information.
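To make the "cascade of layers" idea a little more concrete, here is a minimal sketch in Python. It is an illustration only, not the model described by Health IT Analytics or anything Cascade Strategies uses: the layer sizes, the random weights, and the helper names (`make_layer`, `apply_layer`, `forward`) are all assumptions made up for this example. It shows just the data flow, where each layer consumes the output of the one before it; the training step that adjusts the weights from data is left out.

```python
import math
import random


def make_layer(n_inputs, n_outputs, rng):
    """Build one dense layer as (weights, biases) with random weights (hypothetical values)."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_inputs)]
               for _ in range(n_outputs)]
    biases = [0.0] * n_outputs
    return weights, biases


def apply_layer(layer, inputs):
    """Compute a weighted sum per output unit, then squash it with tanh."""
    weights, biases = layer
    return [math.tanh(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]


def forward(layers, inputs):
    """Pass data through the cascade; each layer reads the previous layer's output."""
    activations = [inputs]
    for layer in layers:
        activations.append(apply_layer(layer, activations[-1]))
    return activations


rng = random.Random(0)                      # fixed seed so runs are repeatable
layer_sizes = [4, 3, 2, 1]                  # assumed sizes: 4 inputs narrowing to 1 output
layers = [make_layer(a, b, rng) for a, b in zip(layer_sizes, layer_sizes[1:])]

activations = forward(layers, [0.5, -1.2, 3.0, 0.0])
for depth, values in enumerate(activations):
    print(f"layer {depth}: {len(values)} values")
```

In a real deep learning system the weights are not random; they are tuned against large amounts of data, which is where the "learning" in the paragraph above comes from.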

While you can depend on the accuracy of AI-generated results, Cascade Strategies takes it one step further by applying a high level of human thinking. This allows Cascade Strategies to interpret and unravel insights a machine would’ve otherwise missed because it can only decide, not choose.

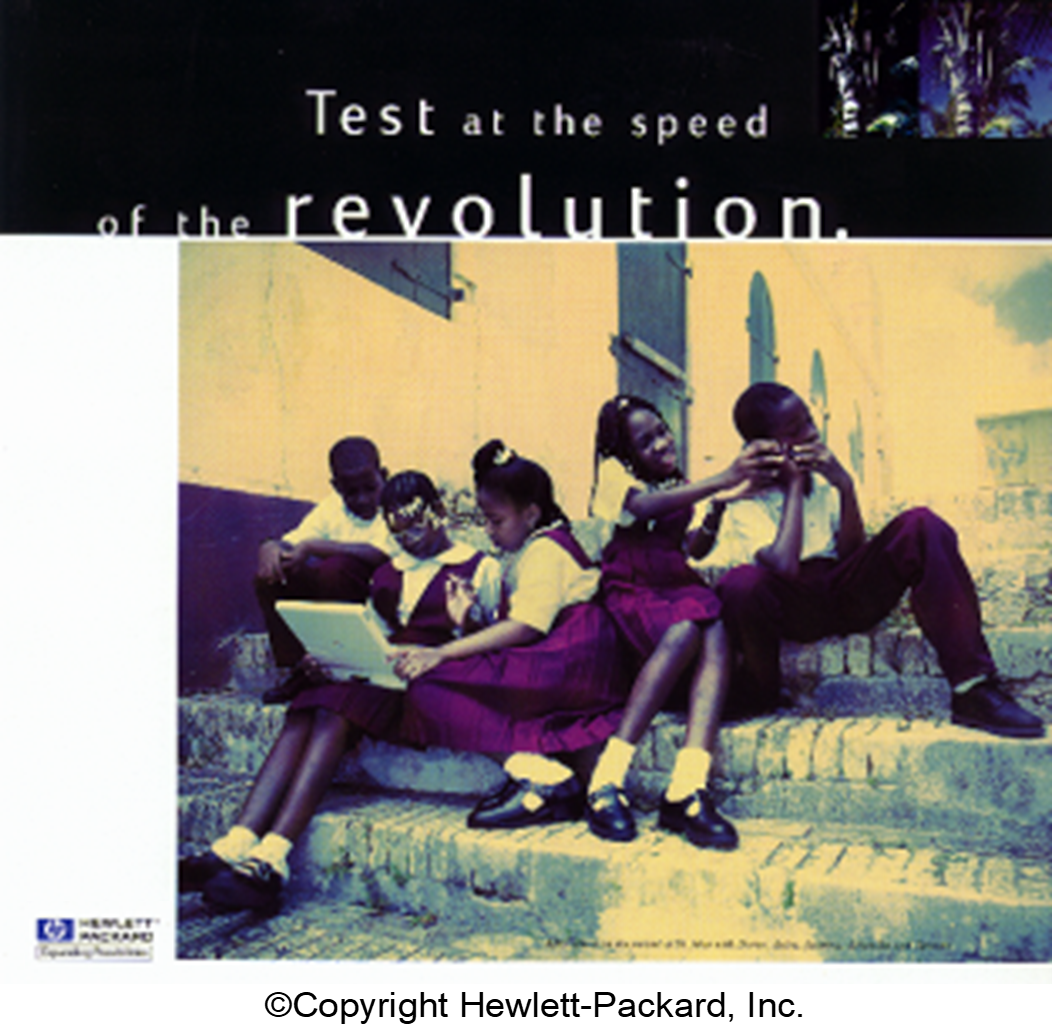

Take a look at the market research project we performed for HP to help create a new marketing campaign. As part of our efforts, we chose to employ very perceptive researchers to spend time with worldwide HP engineers as well as engineers from other companies.

This resulted in our researchers discovering that HP engineers showed greater qualities of “mentorship” than other engineers. Yes, conducting their own technical work was important but just as significant for them was the opportunity to impart to others, especially younger people, what they were doing and why what they were doing was important. This deeper level of understanding led the way for a different approach to expressing the meaning of the HP brand for people and ultimately resulted in the award-winning and profitable “Mentor” campaign.

If you’re tired of the hype about AI-generated market research results and would like more thoughtful and original solutions for your brand, choose the high level of intuitive, interpretive, and synthesis-building thinking Cascade Strategies brings to the table. Please visit https://cascadestrategies.com/ to learn more about Cascade Strategies and more examples of our better thinking for clients.